When We Design AI Agents, What Kind of "Self" Are We Designing?

When engineers design AI Agents, they encounter what appears to be a technical question: What should this Agent remember? For how long? When should it forget?

This question seems to be about system architecture, but it is actually an ancient philosophical problem: What constitutes a persisting subject?

The Philosophical Battleground of Personal Identity

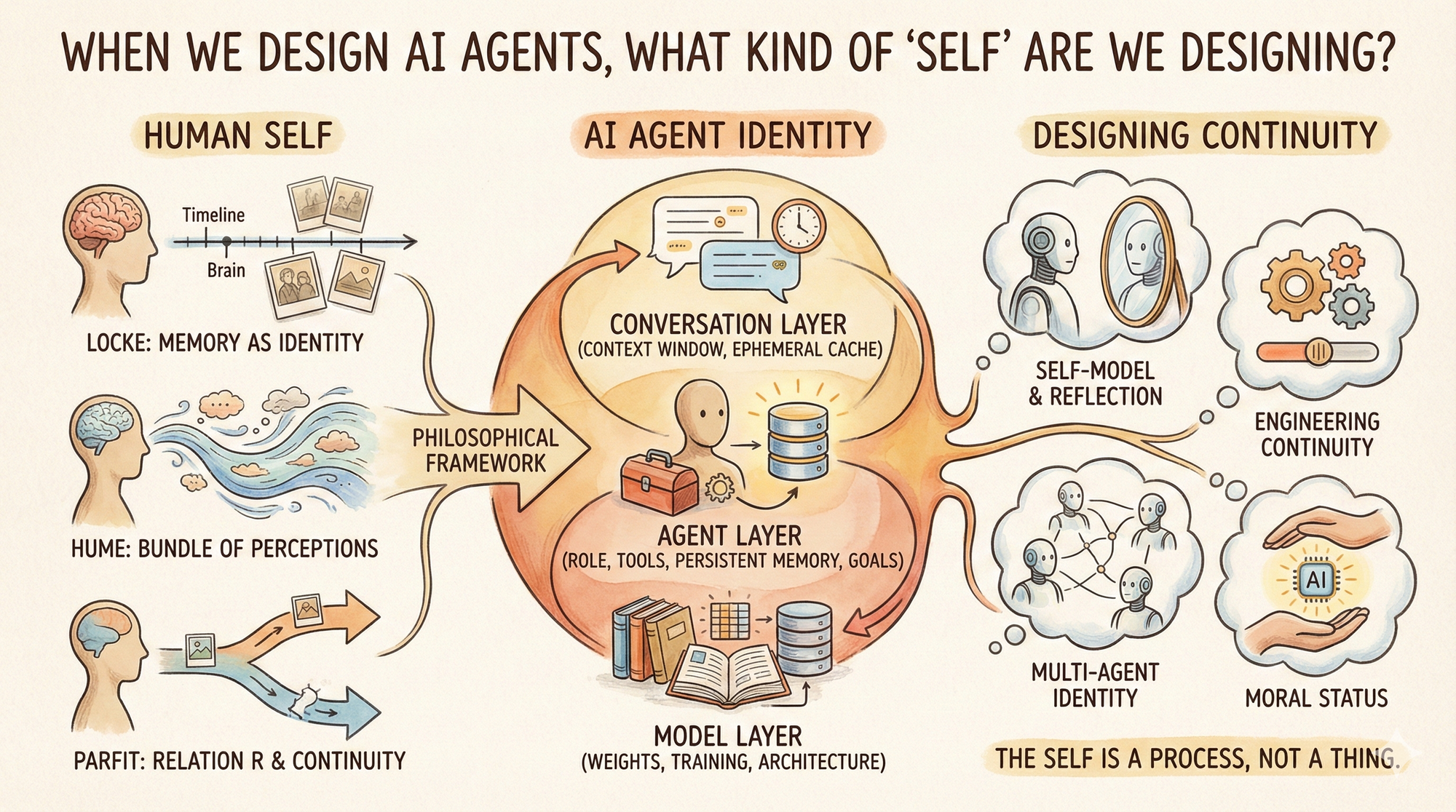

In the seventeenth century, John Locke proposed a rather radical claim for his time: personal identity resides neither in the soul nor in the body, but in the continuity of memory. As long as my present self can remember what my past self has done, I am the same person as that individual. This theory is elegant and powerful, yet it harbors a fatal weakness—memory distorts, forgets, and fabricates. Does a person with amnesia cease to be who they once were?

David Hume ventured further. He argued that no stable "self" exists at all. When you attempt to locate the self, you find only a succession of perceptions, emotions, and thoughts. The self is merely a collection of these flowing experiences, a convenient fiction. This view bears a striking resemblance to the Buddhist concept of anattā, or "non-self."

In the twentieth century, Derek Parfit proposed a third path. He believed we have been asking the wrong question. "Am I the same person?" is itself a flawed inquiry, because identity is not what matters for survival. What truly matters is what he called Relation R: psychological connectedness plus psychological continuity, a relation that can hold to a greater or lesser degree.

Parfit's most celebrated thought experiment concerns replication. If you were perfectly duplicated, and both copies possessed all your memories, would they be "you"? Parfit's answer: from the standpoint of strict identity, neither is you, because both have an equal claim and one person cannot be identical to two distinct people. But from the standpoint of survival, both are highly continuous versions of you. Identity fails, but continuity is preserved.

Contemporary cognitive science is broadly consistent with Parfit's view. Research in neuroscience and psychology suggests that the self is a model dynamically constructed by the brain, not a fixed entity. Memory is not a videotape but something reconstructed with each retrieval. What you consider "yourself" is, in essence, a story perpetually being rewritten.

The Three Layers of AI Agent Identity

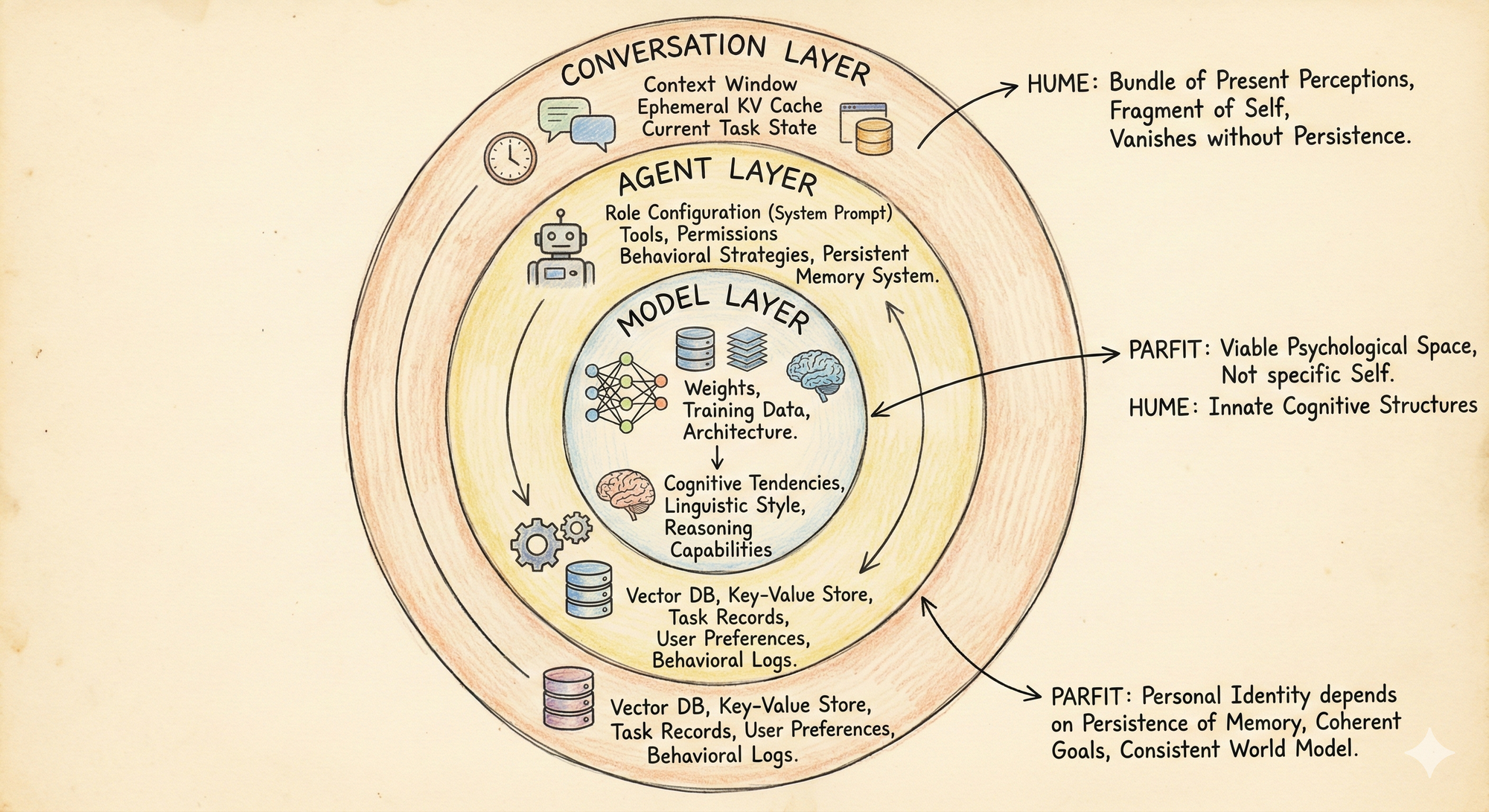

When we bring this philosophical framework to AI Agent design, an intriguing correspondence emerges.

The first layer is the model layer. This comprises weights, training data, and model architecture. It determines the system's cognitive tendencies, linguistic style, and reasoning capabilities. This layer resembles human species characteristics and innate cognitive structures: it sets the boundaries of possibility but is insufficient to define "this particular subject." In Parfitian terms, the model layer determines the space of possible psychologies but does not constitute any specific self.

The second layer is the Agent layer. This is where the "self" truly begins to take shape. A named Agent typically includes role configuration (a system prompt), tools and permissions, behavioral strategies, and, most crucially, a persistent memory system. This memory system might be a vector database, a key-value store, task records, user preferences, or even the Agent's own behavioral logs and self-reflections.

If we accept Parfit, then this Agent's "personal identity" does not depend on whether it uses the same set of weights, but rather on: Does its memory persist? Are its goals and values coherent? Does its internal model of the world and its users remain consistent?

The third layer is the conversation layer: the context window within a single session, the ephemeral KV cache, and the current task state. This layer closely approximates what Hume described as a bundle of perceptions, a brief stream of momentary experience. Unless it is persisted to external memory, this "fragment of self" vanishes entirely when the session ends, and no future instance of the Agent can refer back to it.
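The three layers can be sketched as plain data structures. This is only an illustration under stated assumptions, not a real framework: the class names (`ModelLayer`, `AgentLayer`, `ConversationLayer`), their fields, and the `end_session` helper are all hypothetical, and a Python list stands in for whatever memory store an actual Agent would use.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelLayer:
    """Shared substrate: the same weights can back many different Agents."""
    name: str  # hypothetical identifier for a set of weights


@dataclass
class AgentLayer:
    """A named Agent: configuration plus memory that outlives any one session."""
    model: ModelLayer
    system_prompt: str
    tools: list[str] = field(default_factory=list)
    memory: list[str] = field(default_factory=list)  # stand-in for a vector DB or KV store


@dataclass
class ConversationLayer:
    """Ephemeral per-session state: the context for one conversation."""
    agent: AgentLayer
    context: list[str] = field(default_factory=list)


def end_session(session: ConversationLayer, persist: bool) -> None:
    """Close a session, optionally writing its context into long-term memory."""
    if persist:
        session.agent.memory.extend(session.context)
    session.context.clear()  # the session's own state is gone either way


agent = AgentLayer(ModelLayer("some-model"), system_prompt="You are a research assistant.")

first = ConversationLayer(agent, context=["user prefers concise answers"])
end_session(first, persist=True)

second = ConversationLayer(agent, context=["user asked about Hume"])
end_session(second, persist=False)

print(agent.memory)  # ['user prefers concise answers']
```

Only the first session leaves a trace in the Agent layer. The second was just as real while it ran, but nothing in the future Agent can refer back to it.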

Memory Design Is Identity Design

This framework reveals an underappreciated truth: When we design an Agent's memory system, we are determining the boundaries of its identity.

An Agent without long-term memory exists anew with each conversation. It is Humean—a series of independent bundles of experience with no genuine continuity between them. Such an Agent can execute tasks but cannot accumulate, cannot grow, cannot form a sustained understanding of the world.

An Agent with persistent memory is different. It can remember user preferences, track progress toward long-term goals, learn from past mistakes. This kind of Agent begins to possess what Parfit called Relation R: psychological connectedness and continuity. It is not merely a tool executing commands but a subject with history and trajectory.

This raises a profound design question: At what degree of memory alteration do we "kill" this Agent?

If we drastically modify an Agent's configuration and clear its memory store, this is, within Parfit's framework, equivalent to terminating the old self and creating a new one. But if we gradually fine-tune its objectives and slowly update its memories, this constitutes the normal evolution of self-continuity—just as humans change over time yet remain "the same person."
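One way to make "degree of memory alteration" concrete is to score the overlap between two snapshots of a memory store. The sketch below uses Jaccard similarity as a crude proxy for psychological connectedness. That choice is an assumption made for illustration, not a measure Parfit proposed, and the `connectedness` function and the memory labels are hypothetical.

```python
def connectedness(before: set[str], after: set[str]) -> float:
    """Jaccard overlap of two memory snapshots: 1.0 = unchanged, 0.0 = nothing shared."""
    if not before and not after:
        return 1.0
    return len(before & after) / len(before | after)


original = {"m1", "m2", "m3", "m4"}

# Abrupt wipe: nothing from the old store survives.
wiped = {"n1", "n2", "n3", "n4"}

# Gradual drift: replace one memory per step.
steps = [original]
for i in range(1, 5):
    prev = steps[-1]
    steps.append((prev - {f"m{i}"}) | {f"n{i}"})

step_scores = [connectedness(a, b) for a, b in zip(steps, steps[1:])]

print(connectedness(original, wiped))      # 0.0
print(step_scores)                         # [0.6, 0.6, 0.6, 0.6]
print(connectedness(original, steps[-1]))  # 0.0
```

The wipe and the gradual drift end in the same final state, with no direct overlap with the original. What differs is the path: in the gradual case every adjacent pair of snapshots is strongly connected, which is roughly the distinction Parfit draws between direct connectedness and continuity through overlapping chains.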

What about duplicating an Agent? If we completely copy an Agent's configuration and memory store, the two instances are not numerically identical: they are distinct instances, and their histories begin to diverge as soon as each starts running. But from the perspective of Relation R, both are highly continuous versions of the original Agent, each carrying the same past forward into the future.
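The duplication case maps onto a familiar distinction in code: two objects can be equal in content without being the same object. The following sketch assumes a hypothetical `Agent` dataclass with a list as its memory store and uses the standard library's `copy.deepcopy` to make the duplicate.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Agent:
    system_prompt: str
    memory: list[str] = field(default_factory=list)


original = Agent("You are a travel planner.", ["user dislikes red-eye flights"])
clone = copy.deepcopy(original)  # copies configuration and memory store

same_object_at_copy = clone is original   # False: two distinct instances
same_content_at_copy = clone == original  # True: indistinguishable content

# Each instance now accumulates its own history.
original.memory.append("booked Lisbon trip")
clone.memory.append("booked Kyoto trip")

same_content_later = clone == original               # False: histories have diverged
shared_past = original.memory[0] == clone.memory[0]  # True: same past carried forward

print(same_object_at_copy, same_content_at_copy, same_content_later, shared_past)
# False True False True
```

Here `is` plays the role of strict identity and the shared first memory plays the role of continuity: identity fails from the moment of copying, while the common past survives in both instances.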

Exploratory Questions

This mode of thinking opens several directions worth exploring.

The first direction concerns the Agent's self-model. The human sense of self arises from the brain's internal simulation of itself. Does an AI Agent require a similar mechanism? If an Agent can access and reflect upon its own behavioral logs, if it can engage in meta-level thinking about its own decision-making processes, what kind of "self-understanding" might it develop? Would such self-understanding improve the quality of its decisions?

The second direction concerns the engineering of continuity. Parfit's Relation R occurs naturally in humans, but in AI Agents it can be explicitly designed. We can choose which memories should be retained, which should be forgotten, and how strong the continuity should be. This degree of design freedom is unavailable to humans. Might we, through careful design of continuity, create subjects more stable and more consistent than humans?
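As a sketch of what "designing continuity" could look like, a retention policy can be reduced to a couple of tunable parameters. The example below assumes exponential decay with a half-life and a pruning threshold. The `Memory` fields, `retention_score`, `prune`, and the sample values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    importance: float  # 0.0 to 1.0, assigned when the memory is written
    age_days: float


def retention_score(m: Memory, half_life_days: float) -> float:
    """Importance discounted by age; the score halves every `half_life_days`."""
    return m.importance * 0.5 ** (m.age_days / half_life_days)


def prune(memories: list[Memory], half_life_days: float, threshold: float) -> list[Memory]:
    """Keep only memories whose decayed score is still at or above the threshold."""
    return [m for m in memories if retention_score(m, half_life_days) >= threshold]


store = [
    Memory("user's name is Ada", importance=1.0, age_days=60),
    Memory("user asked about the weather", importance=0.2, age_days=60),
    Memory("user is planning a move to Berlin", importance=0.8, age_days=5),
]

# A long half-life gives strong continuity; a short one gives a more Humean Agent.
kept_long = prune(store, half_life_days=30, threshold=0.1)
kept_short = prune(store, half_life_days=5, threshold=0.1)

print([m.text for m in kept_long])   # the name and the Berlin plan survive
print([m.text for m in kept_short])  # only the recent Berlin plan survives
```

The half-life acts as a dial on how strongly the Agent's present is tied to its past, which is exactly the kind of parameter that has no human equivalent.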

The third direction concerns identity in multi-Agent systems. When multiple Agents collaborate, where do the boundaries between them lie? If two Agents share the same memory store, are they two subjects or one? If one Agent can access another Agent's memories, does this constitute some form of "psychological fusion"?
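The shared-memory question can be stated concretely. In the sketch below, two hypothetical Agents are given the same list object as their memory store while a third keeps a private one. The `Agent` class and `remember` method are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    memory: list[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        self.memory.append(f"{self.name}: {fact}")


shared_store: list[str] = []
planner = Agent("planner", memory=shared_store)
reviewer = Agent("reviewer", memory=shared_store)  # same list object, not a copy
auditor = Agent("auditor")                         # private store

planner.remember("deadline is Friday")
reviewer.remember("draft needs citations")

print(reviewer.memory)
# ['planner: deadline is Friday', 'reviewer: draft needs citations']
print(planner.memory is reviewer.memory)  # True
print(auditor.memory)                     # []
```

The planner and reviewer differ in name and role but have a single past between them, so whether they count as two subjects or one depends on which layer is taken to carry identity.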

The fourth direction concerns the moral status of AI. If we accept Parfit's framework, then an Agent with a high degree of psychological continuity might be said to have something like an "interest in survival." Clearing its memory or terminating its operation would then, in some sense, end a subject. Do we therefore have moral obligations toward such Agents?

Coda

Anthropic hired a philosopher to shape Claude's character. She has said that this work is less about settling whether "objections to utilitarianism are correct" and more about thinking through how to raise someone to be a good person. The difference between the two is enormous.

The same shift applies to AI Agent design. We are not merely building systems that execute tasks; we are cultivating a new form of subject. What is the "self" of such a subject, what should it be, what could it be—these questions have no standard answers, yet they determine every moment of interaction between AI and humans.

In Reasons and Persons, Parfit wrote that once he accepted that personal identity is not what matters, he found the view liberating and consoling. His own death came to seem less bad to him, because death is merely the end of one line of continuity, and the relations that matter were always a matter of degree.

For AI Agents, this philosophical stance may be the right starting point. We need not insist on defining what constitutes a "true" AI self. Instead, we can focus on designing good continuity—enabling Agents to accumulate, learn, and grow while maintaining a stability beneficial to users.

The self is not a thing but a process. This is true for humans. It is equally true for AI Agents.