The LLM API War: Strategy, Open Source Advantage, and the Path to Scale

The landscape of large language models (LLMs) has evolved rapidly, and multiple providers now offer APIs for the same open source models at very different prices. DeepSeek's models, for example, are served on several platforms (OpenRouter, Hyperbolic, and DeepSeek's own official API), each with its own pricing structure. This variation is more than a curiosity; it shows how economic models, infrastructure costs, and distribution strategies shape the LLM API war.

Below is an in-depth look at how the competition is unfolding, why open source is central to cost-performance optimization, and what strategic factors—from GPU infrastructure to privacy concerns—will determine the winners in this high-stakes race.

1. Pricing Variations for the Same Open Source Models

Even though DeepSeek's LLMs, DeepSeek-V3 and DeepSeek-R1, are open source, each platform prices them differently (all prices in USD per million tokens):

OpenRouter

- DeepSeek-V3: $0.49 input, $0.89 output

- DeepSeek-R1: $3.00 input, $8.00 output

Note: R1 rates in particular are notably higher than on the other platforms.

Hyperbolic

- DeepSeek-V3 (FP8 precision): $0.25, the same rate for input and output tokens

- DeepSeek-R1 (FP8 precision): $2.00, the same rate for input and output tokens

Note: FP8 quantization cuts costs but can slightly impact quality.

DeepSeek Official API

- DeepSeek-Chat (DeepSeek-V3): input $0.07 on a cache hit or $0.27 on a cache miss; output $1.10

- DeepSeek-Reasoner (DeepSeek-R1): input $0.14 on a cache hit or $0.55 on a cache miss; output $2.19

Note: Particularly cost-effective for cache hits.

Such differences highlight a key truth in AI: the model itself is only part of the cost equation. Factors such as infrastructure, precision format, brand positioning, and business incentives all drive pricing structures for what is essentially the same base technology.
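To see how these rate cards translate into a bill, here is a minimal Python sketch that prices one workload on each platform using the figures listed above. The workload size (500 million input tokens and 100 million output tokens per month) is a hypothetical assumption chosen for illustration. DeepSeek's official API is priced at its cache-miss input rate, which is the conservative case.

```python
# Per-million-token rates in USD, as (input, output), taken from the price list above.
# Hyperbolic charges one FP8 rate for both directions; DeepSeek's official API
# is priced at its cache-miss input rate (the conservative case).
RATES: dict[str, dict[str, tuple[float, float]]] = {
    "DeepSeek-V3": {
        "OpenRouter": (0.49, 0.89),
        "Hyperbolic (FP8)": (0.25, 0.25),
        "DeepSeek official (cache miss)": (0.27, 1.10),
    },
    "DeepSeek-R1": {
        "OpenRouter": (3.00, 8.00),
        "Hyperbolic (FP8)": (2.00, 2.00),
        "DeepSeek official (cache miss)": (0.55, 2.19),
    },
}


def workload_cost(input_tokens: int, output_tokens: int, rate: tuple[float, float]) -> float:
    """USD cost of a workload given (input, output) rates per million tokens."""
    in_rate, out_rate = rate
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


# Hypothetical monthly workload, for illustration only.
INPUT_TOKENS = 500_000_000
OUTPUT_TOKENS = 100_000_000

for model, providers in RATES.items():
    print(model)
    costs = {name: workload_cost(INPUT_TOKENS, OUTPUT_TOKENS, rate) for name, rate in providers.items()}
    for name, cost in sorted(costs.items(), key=lambda item: item[1]):  # cheapest first
        print(f"  {name:<32}${cost:>10,.2f}")
```

Under these assumptions the cheapest option differs by model. For V3, Hyperbolic's FP8 rate wins ($150 versus $245 on DeepSeek's API and $334 on OpenRouter). For R1, DeepSeek's own API wins ($494 versus $1,200 and $2,300). The ranking also depends on the input/output mix. A very output-heavy R1 workload would tip slightly toward Hyperbolic, because its flat $2.00 undercuts DeepSeek's $2.19 on output tokens.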

2. Open Source as a Strategic Lever

Low Customer Acquisition Cost (CAC)

By releasing models as open source, DeepSeek (and similar projects) leverage the current market enthusiasm for AI to attract adopters at minimal expense. Developers can readily incorporate the models, test them, and modify them without restrictive licensing. This organic adoption can rapidly build a user base and a reputation—key ingredients for long-term growth.

Cost-Performance Is King

When the same open source model can be consumed at different rates, businesses naturally choose the best cost-to-performance ratio. Open source fosters a competitive environment where some providers emphasize ultra-low prices (e.g., through FP8 quantization), while others might offer better support or higher precision. This competition drives continuous improvement in both pricing and model performance.
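Part of why FP8 serving can be priced lower is simple arithmetic. FP8 stores each weight in one byte, versus two bytes for 16-bit formats such as BF16, so it halves the memory needed to hold the weights and therefore the GPUs needed per model replica. The following is a minimal sketch, assuming a 671-billion-parameter model (the published total size of DeepSeek-V3) and 80 GB GPUs. It deliberately ignores the KV cache, activations, and runtime overhead.

```python
import math

BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}  # storage size of one weight in each format


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Memory needed to hold the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def min_gpus_for_weights(num_params: float, fmt: str, gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPUs per replica: counts weights only, no KV cache or overhead."""
    return math.ceil(weight_memory_gb(num_params, fmt) / gpu_memory_gb)


PARAMS = 671e9  # assumed model size: DeepSeek-V3's published total parameter count

for fmt in ("BF16", "FP8"):
    print(f"{fmt}: {weight_memory_gb(PARAMS, fmt):,.0f} GB of weights, "
          f">= {min_gpus_for_weights(PARAMS, fmt)} x 80 GB GPUs")
# BF16: 1,342 GB of weights, >= 17 x 80 GB GPUs
# FP8: 671 GB of weights, >= 9 x 80 GB GPUs
```

Real deployments need extra memory beyond the weights, so these figures are lower bounds. The ratio still holds, though: one byte per weight instead of two roughly halves the weight footprint of each serving replica.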

Expanding Developer Mindshare

Open source models often gain strong community support. Developers trust transparent systems, contribute enhancements, and share best practices. That grassroots momentum further entrenches the model’s use in diverse applications—from chatbots to content summarization—enhancing brand visibility and generating new feedback loops for refinement.

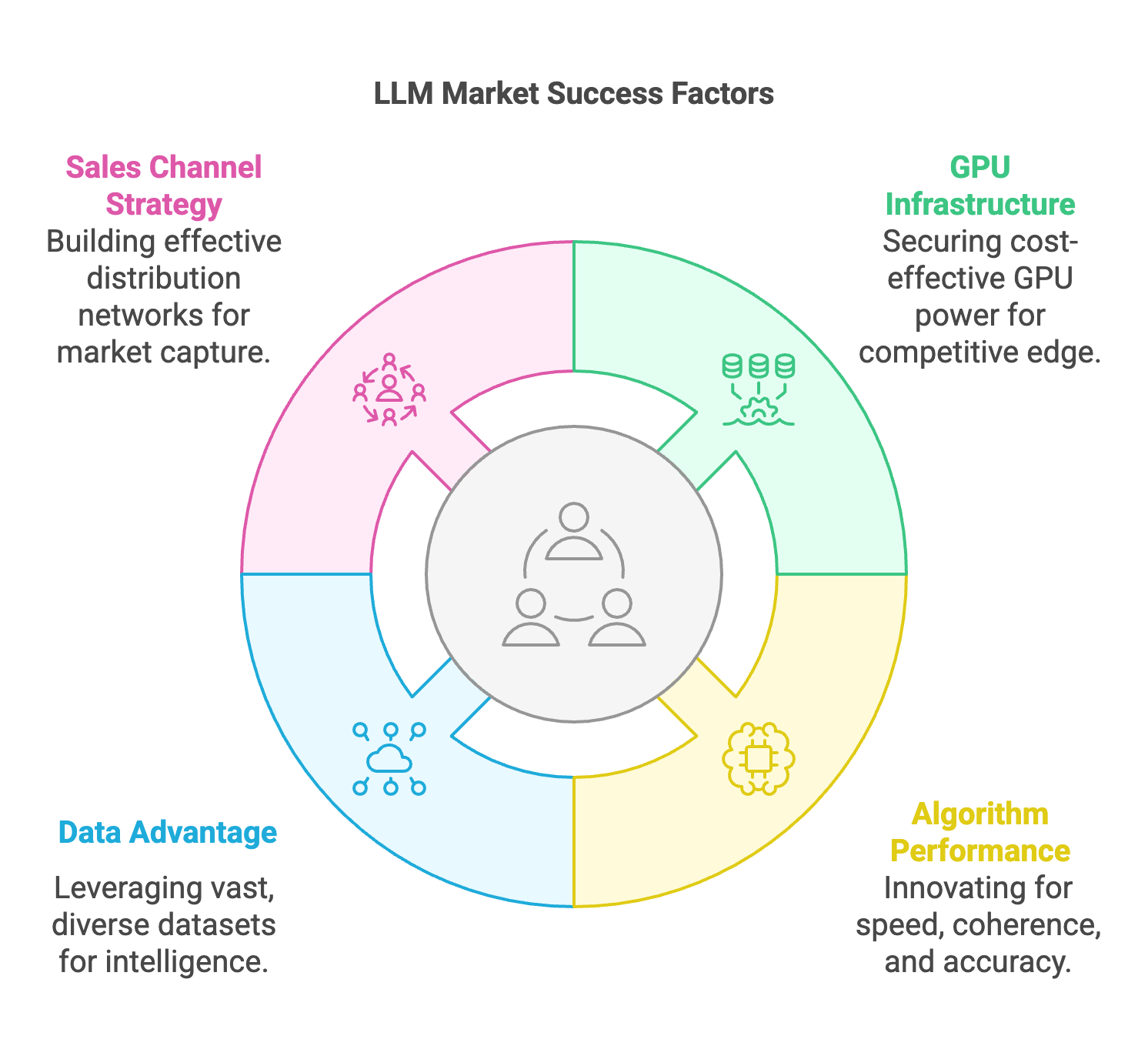

3. Four Pillars of Success in the LLM War

Success in the LLM API market rests on four main pillars. Providers aiming for leadership must master them all:

- GPU Infrastructure (Computing Power)

Large-scale LLMs require significant computational resources for training and inference. Access to robust GPU clusters (or next-generation accelerators) is paramount. As usage grows, so does the need for efficient scaling, and providers that can secure or provision GPU power more cost-effectively have a clear edge.

- Algorithm (Model Performance)

Real-world performance determines a model's viability. Speed, coherence, and accuracy on complex queries are crucial. Innovations such as new architectures, quantization methods, or specialized fine-tuning pipelines can decisively differentiate providers in a crowded marketplace.

- Data (Scaling Laws)

Large language models thrive on big, diverse datasets. Scaling laws describe how model performance improves predictably as training data, parameters, and compute grow, and the quality and breadth of training data often correlate directly with how "intelligent" the model appears. Providers that can gather, curate, and leverage massive (and domain-specific) datasets gain a significant performance advantage.

- Sales Channel

A sophisticated model alone won't gain traction without an effective sales and distribution strategy. Whether it's forging partnerships, building developer marketplaces, or leveraging existing enterprise networks, distribution ultimately determines how quickly a model can capture market share. Strong sales channels create a positive feedback loop: more customers → more data + revenue → better models → expanded talent + GPU resources → more customers.
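The GPU pillar is one source of the price differences in section 1. A provider's break-even price is roughly its hourly GPU spend divided by the number of tokens it serves per hour. The Python sketch below shows that arithmetic. The GPU price, replica size, throughput, and utilization figures are hypothetical placeholders, not measurements of any provider.

```python
def serving_cost_per_million_tokens(gpu_hourly_usd: float, num_gpus: int,
                                    tokens_per_second: float, utilization: float) -> float:
    """Break-even USD per million tokens for one model replica.

    gpu_hourly_usd: rental price of one GPU per hour
    num_gpus: GPUs in the replica
    tokens_per_second: aggregate throughput of the replica at full load
    utilization: fraction of each hour the replica spends serving traffic, in (0, 1]
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    hourly_cost = gpu_hourly_usd * num_gpus
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_cost / tokens_per_hour * 1_000_000


# Hypothetical inputs, for illustration only.
busy = serving_cost_per_million_tokens(gpu_hourly_usd=2.0, num_gpus=8,
                                       tokens_per_second=5000, utilization=0.8)
idle = serving_cost_per_million_tokens(gpu_hourly_usd=2.0, num_gpus=8,
                                       tokens_per_second=5000, utilization=0.4)
print(f"80% utilization: ${busy:.2f} per million tokens")
print(f"40% utilization: ${idle:.2f} per million tokens")
```

In this example, halving utilization doubles the break-even price ($1.11 versus $2.22 per million tokens). This is one reason providers with steady, high-volume demand can afford lower rates, which in turn feeds the customer flywheel described above.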

4. Bootstrapping a Positive Feedback Cycle

Setting the flywheel in motion requires a combination of savvy marketing, technical excellence, and strategic partnerships:

- Developer Ecosystem: Lower the barriers to entry so developers and enterprises can quickly integrate the model, encouraging organic growth.

- Refined Use Cases: Offer tailored solutions for specific domains, such as customer service or specialized Q&A, to differentiate your product from more generic alternatives.

- Iterative Improvement: Continually refine the model using real-world usage data to improve performance and reduce unwanted outputs.

- Scalable Infrastructure: Plan your infrastructure capacity to handle both near-term bursts of user adoption and long-term scaling needs. Partnerships with cloud providers or HPC services can offer cost flexibility.

When executed effectively, these efforts amplify each other: better performance draws more adoption, which yields more revenue and data for training—and the cycle repeats.
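The scalable-infrastructure point above can be made concrete with a simple capacity estimate: how many model replicas are needed to absorb peak traffic while keeping some spare headroom. This is a minimal Python sketch. The steady and burst traffic levels, the per-replica throughput, and the 30% headroom are all hypothetical assumptions.

```python
import math


def replicas_needed(peak_tokens_per_second: float, replica_tokens_per_second: float,
                    headroom: float = 0.3) -> int:
    """Replicas required to serve peak load while keeping `headroom` spare capacity.

    headroom=0.3 means the planned peak should use at most 70% of total capacity.
    """
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_per_replica = replica_tokens_per_second * (1 - headroom)
    return math.ceil(peak_tokens_per_second / usable_per_replica)


# Hypothetical: steady traffic of 20k tokens/s, a launch-day burst of 3x that,
# and replicas that each sustain 5k tokens/s.
steady = replicas_needed(20_000, 5_000)
burst = replicas_needed(60_000, 5_000)
print(f"steady state: {steady} replicas, burst: {burst} replicas")
```

Here the burst needs 18 replicas while steady traffic needs only 6. Renting the difference on demand through cloud or HPC partners avoids paying for 12 mostly idle replicas, which is the cost flexibility the list above refers to.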

5. Privacy and Censorship Concerns Fuel Open Source

An additional catalyst in the LLM API war is data privacy and potential censorship. Many enterprises hesitate to send sensitive information to large providers or fear that government oversight might limit their AI’s capabilities. Open source solutions offer:

- Custom Deployments: Self-hosted or private-cloud solutions that safeguard data.

- Reduced Regulatory Risk: Developers can control the data flow, mitigating concerns about forced censorship or data capture.

- Greater Transparency: The open nature of the model fosters trust by revealing what’s under the hood.

As more organizations recognize these benefits—particularly in regions wary of outside data control—open source LLMs like DeepSeek become even more attractive.

6. The Evolving Competitive Landscape

Looking ahead, several factors will shape the ongoing LLM API war:

- GPU Arms Race: Cloud providers and specialized data centers are competing to sell GPU capacity, potentially driving better pricing or performance optimizations for LLM vendors.

- Verticalized LLMs: Expect solutions uniquely tailored to industries like healthcare, legal, finance, or gaming. These specialized LLMs may wield domain authority that general-purpose models lack.

- Innovative Pricing Models: Dynamic billing schemes—like cache-based pricing, usage-based licensing, or even performance-based billing—could become more widespread.

- Regulatory Pressures: Governments may impose stricter rules around AI outputs, training data usage, and consumer privacy. The open source approach may fare well under stricter regulation, since it gives users greater control over deployment and data.

- Global Expansion: Demand for AI is growing beyond North America, with emerging markets requiring localized models or compliance with unique data/privacy laws, opening new competitive frontiers.

Providers that can navigate these shifts—balancing cost, performance, and regulatory considerations—will thrive in the emerging AI marketplace.
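Cache-based pricing, one of the billing trends listed above, is already in use on DeepSeek's official API. Under it, the effective input rate depends on how many input tokens hit the cache. The following minimal Python sketch uses the DeepSeek-Chat rates from section 1 ($0.07 per million on a cache hit, $0.27 on a miss). The hit ratios are illustrative assumptions.

```python
def effective_input_rate(hit_rate: float, miss_rate: float, cache_hit_ratio: float) -> float:
    """Blended USD per million input tokens, given the fraction of tokens that hit the cache."""
    if not 0 <= cache_hit_ratio <= 1:
        raise ValueError("cache_hit_ratio must be in [0, 1]")
    return cache_hit_ratio * hit_rate + (1 - cache_hit_ratio) * miss_rate


# DeepSeek-Chat (DeepSeek-V3) input rates from the price list in section 1.
HIT, MISS = 0.07, 0.27

for ratio in (0.0, 0.5, 0.9):
    rate = effective_input_rate(HIT, MISS, ratio)
    print(f"{ratio:.0%} cache hits: ${rate:.3f} per million input tokens")
```

On the input side alone, any hit ratio above 10% brings the official rate below Hyperbolic's flat $0.25. Output tokens still cost $1.10 versus $0.25, however. Because only a repeated prompt prefix can be served from cache, this kind of pricing rewards applications that reuse the same system prompt or few-shot examples.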

Final Thoughts

The LLM API war is a multi-layered competition, shaped by cost considerations, infrastructural constraints, algorithmic innovation, and strategic sales channels. Open source models, exemplified by DeepSeek, demonstrate how accessible technology combined with smart distribution can disrupt entrenched incumbents. As companies evaluate their path forward, understanding these strategic dynamics—particularly the four pillars of computing power, algorithmic performance, data scale, and sales channel—will be critical.

In an era when AI is becoming ever more central to business innovation, staying informed on pricing, performance, privacy, and vendor strategies is non-negotiable. Those who adapt most effectively to this fast-evolving terrain will emerge as key players in the new AI economy.

Thanks for reading. If you’re following the shifts in AI at a strategic level, keep a close eye on how cost, performance, and distribution shape the next generation of LLM APIs—and be ready to pivot as the competitive landscape evolves.