PictoTales Series 3: My Journey Adapting to Modern Tech Stacks and AI-Driven Development

Welcome to my indie hacker blog series where I share the ups and downs of building Pictotales in public. My hope is to provide both a personal reflection on catching up with rapidly evolving technologies and a strategic view that other creators can draw inspiration from. In this post, I’ll dive deep into how I chose a modern serverless tech stack, the role of AI-powered coding tools in my workflow, and my philosophy on evolving alongside new technologies.

Part 1: The Struggle to Catch Up

From iOS and Django to Modern Full-Stack Development

When I started out as an iOS developer at iCHEF, I built and maintained iPad-based POS systems. Eventually, I moved on to designing internal operation systems in Django (Python) for CRM and subscription management. Both iOS development and Django offered solid foundations; however, they didn’t prepare me for the rapid changes in frameworks, cloud services, and AI tooling that would emerge in recent years.

After my transition into a leadership role as Chief Strategy Officer at iCHEF, I found myself focusing on high-level decision-making. I wasn’t actively coding in newer, cutting-edge frameworks, which made returning to hands-on development daunting. When I decided to build Pictotales, I realized I had a lot to relearn—React, serverless infrastructure, Next.js, tRPC, AI frameworks—the list felt endless. But one principle guided me: stay open to learning and embrace modern tools that can reduce friction for a small (or solo) developer team.

Part 2: Choosing a Modern Serverless Tech Stack

Why Serverless and Why Now?

As a one-man developer team juggling multiple responsibilities—product design, coding, marketing, and beyond—I needed a stack that was both scalable and low-maintenance. The serverless approach made perfect sense:

- Minimal Ops Overhead: Platforms like Zeabur reduce the operational load. I don’t need to maintain my own servers or worry about scaling when traffic spikes, and the initial cost is very low.

- Modular and Cloud-Native: Using Supabase for Postgres, storage, and authentication made it easy to spin up a fully managed database solution. Integrating that with Next.js (for the frontend) and FastAPI + DSPy (for AI/stateless services) results in a seamless, modern toolchain.

- Easy Parallelization and Scheduling: With QStash Workflow, running parallel tasks or scheduled jobs becomes straightforward, which is vital when dealing with AI workflows that can be resource-intensive.
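
To make the parallel-task idea concrete, here is a minimal local sketch of the fan-out pattern. It does not use the QStash API; it swaps in Python's standard `asyncio` purely for illustration. The function names and the "one task per story page" scenario are assumptions, not Pictotales code.

```python
import asyncio


async def generate_page(page_number: int) -> str:
    """Hypothetical stand-in for one slow AI call."""
    await asyncio.sleep(0.01)  # simulate network/model latency
    return f"page-{page_number}"


async def generate_story(page_count: int) -> list[str]:
    # Fan out: create one coroutine per page, then run them concurrently.
    tasks = [generate_page(n) for n in range(1, page_count + 1)]
    # gather() returns results in the same order as its arguments.
    return await asyncio.gather(*tasks)


if __name__ == "__main__":
    print(asyncio.run(generate_story(3)))
```

A managed workflow service adds what this sketch lacks, such as retries, scheduling, and durability across serverless invocations.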

Part 3: The Power of Quick-Start Solutions

supastarter for Next.js as a Coding Context

Time is of the essence when you’re a solo founder. Rather than reinvent the wheel, I invested in a robust “quick start” solution: supastarter for Next.js. Here’s why:

- Battle-Tested Configuration: It’s already set up with Next.js, Prisma, Tailwind, and more. I didn’t have to spend days configuring each piece from scratch.

- Optimized Developer Experience: This starter kit comes with best practices built in. By adopting a standard code structure, it also becomes easier for AI coding assistants to parse and generate relevant suggestions.

- Excellent Reference for AI Tools: When you feed AI pair-programming tools like GitHub Copilot or AI editors (Cursor, Aider, Windsurf) a well-structured, proven codebase, they can provide more accurate and context-aware completions.

Tip for Beginners:

If you’re new to a tech stack, a reputable starter kit can help you stand on the shoulders of experts. Combine that with a detailed PRD (Product Requirements Document), and your AI assistant has far more to work with.

Part 4: Learning Through Your AI Copilot

Accelerating Development with Context and Vision

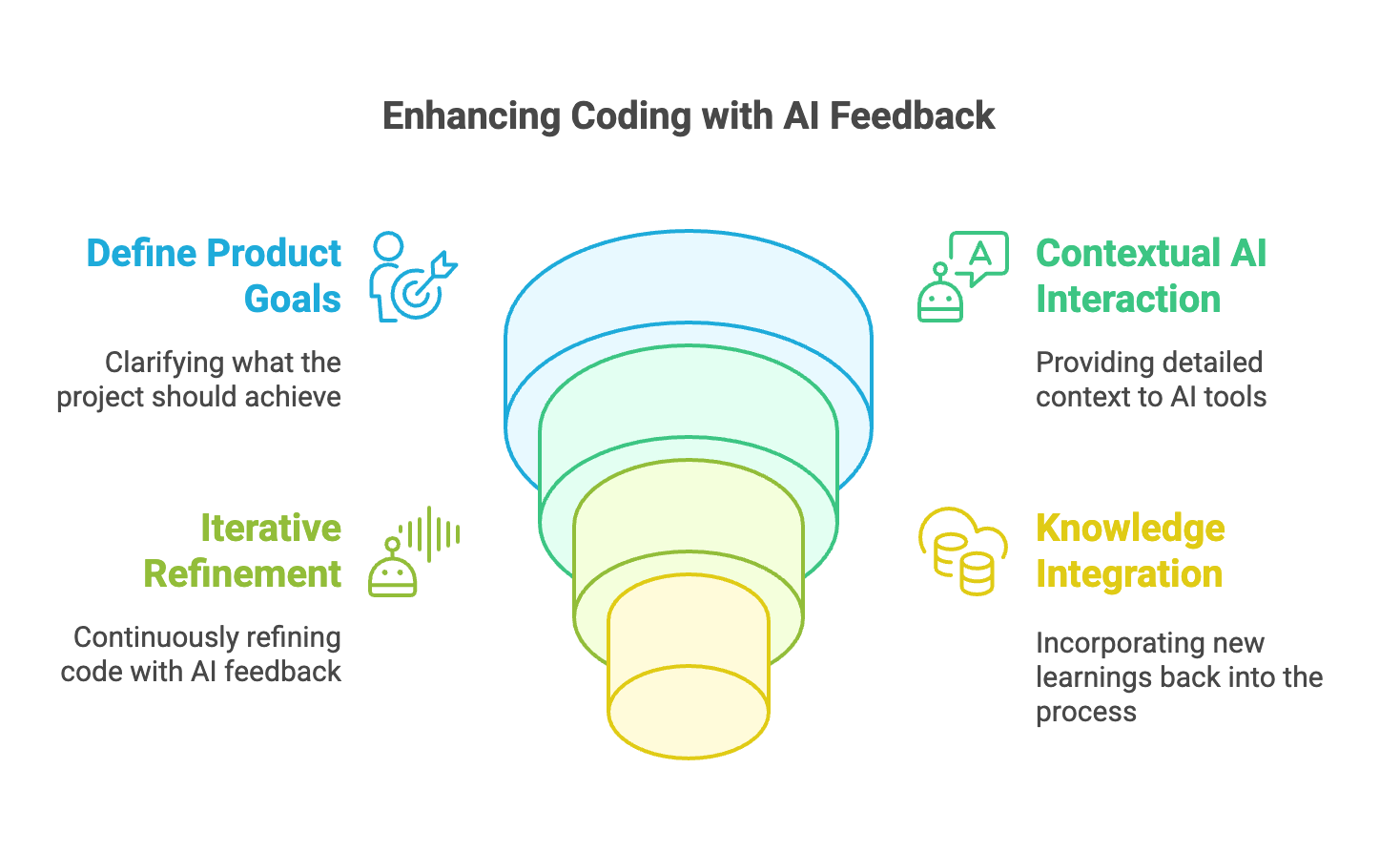

One of the biggest leaps in my workflow has been the integration of AI-powered coding assistants. My approach looks like this:

- Lay Out the Vision Clearly: Before I code, I define high-level product goals—what Pictotales should do, the design approach, and any specific functionality.

- Context-Rich AI Prompts: I provide thorough instructions to Copilot or other AI editors, including references to the existing project structure (e.g., “This is a Next.js + tRPC project” or “Here’s how DSPy integrates with FastAPI”).

- Iterative Feedback Loops: Instead of writing massive blocks of code alone, I iterate quickly with AI suggestions, refining them as needed. This method has helped me learn React and Next.js more deeply than any tutorial alone would have.

- Continuous Improvement: Every time I refactor code or learn a new pattern (like how to optimize tRPC calls), I share that context back with my AI editor. This leads to better suggestions over time.
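
One way to picture the "context-rich prompt" and "share context back" steps is a small helper that assembles project context into a prompt. This is only a sketch: `ProjectContext`, `build_prompt`, and the example values are hypothetical names for illustration, not part of any AI editor's API.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectContext:
    """Hypothetical container for what the AI editor should know."""
    stack: str
    goal: str
    conventions: list[str] = field(default_factory=list)


def build_prompt(ctx: ProjectContext, task: str) -> str:
    # Put the stable context first, then the specific task.
    lines = [f"Project stack: {ctx.stack}", f"Product goal: {ctx.goal}"]
    if ctx.conventions:
        lines.append("Conventions learned so far:")
        lines.extend(f"- {rule}" for rule in ctx.conventions)
    lines.append(f"Task: {task}")
    return "\n".join(lines)


ctx = ProjectContext(stack="Next.js + tRPC", goal="Example product goal")
# "Continuous improvement": record a newly learned pattern for later prompts.
ctx.conventions.append("Example convention learned during a refactor")
prompt = build_prompt(ctx, "Add a new tRPC query")
```

Appending to `conventions` after each refactor mirrors the feedback loop described above: later prompts carry what earlier sessions taught.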

For a clearer understanding of how I manage AI editor context, feel free to check out the previous article in this series: https://indiehack.in/pictotales-series-2-empowering-your-ai-editor-providing-the-right-context-for-next-level-coding-2/

Part 5: Evolving Development Techniques with AI

From Prompting to Agent-Based Assistance

I’ve noticed that as AI editors evolve, so does my coding methodology. When I started in August 2024, I wrote detailed prompts telling Copilot precisely what to code. Now more agent-based approaches are emerging, where the AI can:

- Run Tests and Fix Them: Provide an instruction like, “Run all tests, identify which fail, and propose fixes,” and the AI can handle much of the debugging automatically.

- Look Up Documentation Autonomously: Some AI solutions can fetch the latest documentation for libraries like React or tRPC and incorporate that knowledge into the code suggestions.

- Adopt Coding Best Practices in Real Time: As frameworks release updates, AI can adapt quickly, meaning you don’t have to comb through release notes alone.
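
The "run tests, identify which fail, propose fixes" instruction can be pictured as a loop whose first half is plain automation. The sketch below covers only that half, using Python's standard `unittest`: it runs a suite, collects the failing test names, and builds the text an agent would be asked to act on. The check functions and helper names are hypothetical, and how a real agent does this internally is not shown here.

```python
import unittest


def check_addition() -> None:
    assert 1 + 1 == 2


def check_title_case() -> None:
    # Fails on purpose: str.title() yields "Pictotales".
    assert "pictotales".title() == "PictoTales"


def sample_suite() -> unittest.TestSuite:
    """Hypothetical stand-in for a real project's test suite."""
    return unittest.TestSuite(
        [unittest.FunctionTestCase(check_addition),
         unittest.FunctionTestCase(check_title_case)]
    )


def failing_test_ids(suite: unittest.TestSuite) -> list[str]:
    result = unittest.TestResult()
    suite.run(result)
    # Both assertion failures and unexpected errors need fixing.
    return [test.id() for test, _traceback in result.failures + result.errors]


def build_fix_request(failed: list[str]) -> str:
    if not failed:
        return "All tests pass."
    listing = "\n".join(f"- {name}" for name in failed)
    return f"These tests fail; propose fixes:\n{listing}"


request = build_fix_request(failing_test_ids(sample_suite()))
```

An agent-based editor would then read the failing code, edit it, and rerun the suite until the request comes back as "All tests pass."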

For me, these advances underscore the value of staying flexible. While it’s tempting to rely on older methods, embracing these new AI-driven workflows keeps you at the forefront of rapid technological change.

Part 6: Balancing Prioritization and Adaptability

A Senior Developer’s Perspective

As someone with leadership experience, I’ve learned that prioritization is everything. No matter how advanced your tools, you can’t let feature creep or shiny object syndrome derail your product goals. Three principles guide my decision-making:

- Business Value First: Does this framework or feature move me closer to delivering a valuable product? If not, it can wait.

- Technical Debt Management: Use modern tools, but don’t adopt every new library just because it’s trending. There’s a trade-off between exploring new tech and ensuring stability.

- Iterative Roadmap: Break features into manageable sprints. AI can help you code faster, but it won’t replace sound project management skills—those are still essential.

Part 7: Lessons Learned and Looking Ahead

Reflections on My “Building in Public” Journey

- It’s OK to Feel Overwhelmed: Tech stacks evolve so quickly that feeling lost is normal—even if you’re a veteran developer. Embrace it as part of the process.

- Use Starter Kits to Jumpstart: Don’t waste time on boilerplate. Start with a stable foundation like supastarter for Next.js or a similar solution.

- Leverage AI as a Learning Partner: Tools like GitHub Copilot, Cursor, and agent-based assistants can speed up your learning curve, especially if you provide strong context.

- Stay Flexible and Curious: The AI development landscape will continue to shift rapidly. Remain adaptable and never stop experimenting.

- Prioritize Ruthlessly: Your product’s success relies on building features that matter, not chasing every new library.

Ultimately, building Pictotales has reinforced my belief that a curious mind, combined with strategic decision-making, can lead you to master any new stack—even if you’ve been away from the codebase for a while. My journey is far from over; I’ll continue sharing updates, challenges, and breakthroughs. I hope my experiences inspire you to keep building, keep learning, and most importantly, keep sharing.

Thanks for following along on this “Build in Public” journey. Stay tuned for more insights into my next iteration of Pictotales. If you have questions or want to share your own story, feel free to reach out!