OpenAI Operator, the Future of AI-Driven Computer Use, and What It Means for Indie Hackers

In the never-ending quest for efficient workflows and scalable growth, we founders, makers, and indie hackers look to emerging technologies for that next breakthrough. One of the hottest areas is AI-driven computer usage—tools that go beyond chat interfaces to actually do the clicking, scrolling, and form-filling for us. Today, I’m excited to share some thoughts on a fresh release from OpenAI, called Operator, and how it both highlights the potential and exposes the challenges of AI agents in real-world applications. Along the way, I’ll document how these insights factor into my own “Build in Public” journey.

1. What Is Operator and Why It Matters

OpenAI’s Operator is an agent that can interact with the web on your behalf. Instead of just generating text, it navigates websites by “seeing” screenshots and “clicking” or “typing” as if it were a human user. It runs on a new model called the “Computer-Using Agent” (CUA), which, according to OpenAI, combines GPT-4o’s vision capabilities with reasoning trained through reinforcement learning so it can work with graphical user interfaces (GUIs). The big-picture promise is to save time on repetitive tasks, anything from booking a flight to ordering groceries to creating memes, while letting companies offer more engaging AI-driven experiences to their customers.

While the current release is only available to Pro users in the U.S., OpenAI plans to expand it to more tiers and integrate directly into ChatGPT. So for those of us building digital products, it’s worth paying attention to how this technology evolves, because it could fundamentally change the way our customers (and we ourselves) get things done.

2. Why AI Agents for Computer Use Still Face Real Hurdles

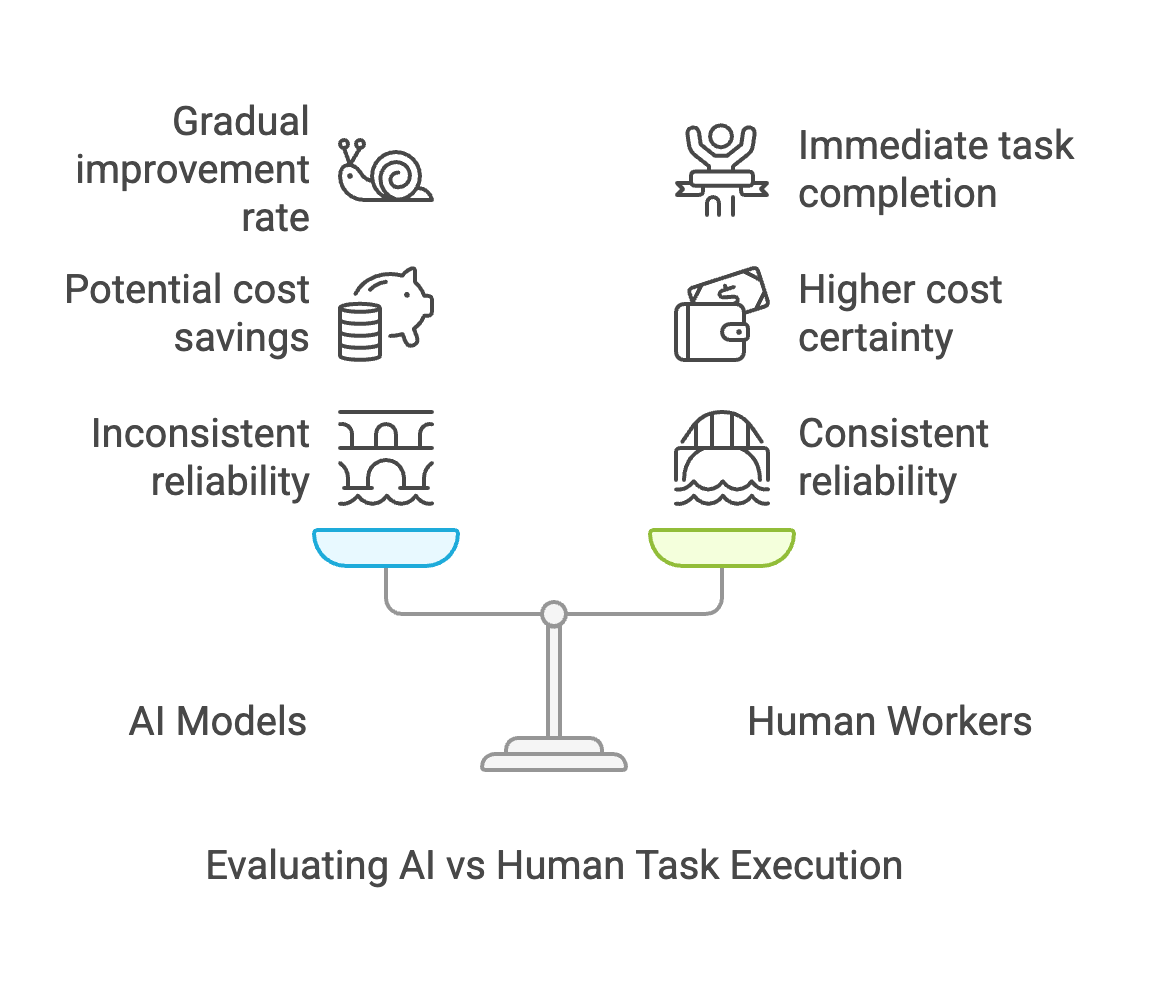

Despite Operator’s exciting premise, it also underscores how current multi-modal LLM technology falls short of seamless, cost-effective computer control. My personal rubric for evaluating AI-based computer operation focuses on three questions:

- Can current models reliably complete the tasks at hand?
  - Language models and their reasoning have improved a lot, but reliability on dynamic web pages and complex forms is still inconsistent.
- Is the cost of completion lower than paying a human for the same tasks?
  - Until AI agents can run with near-zero friction and minimal oversight, many repetitive tasks might still be cheaper to outsource to remote freelancers or to handle in-house.
- Will the task completion rate improve significantly within a year?
  - Given the rapid progress in language models, we will probably see incremental improvements, though not necessarily the dramatic leap many hope for in the short term.

When these conditions aren’t met, today’s agents can feel more like demonstrations than production-ready tools. While I’m personally bullish on the future, I acknowledge it might take more time and iteration before such AI-driven computer operation hits mainstream viability.
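To make the second question concrete, here is a rough sketch of the comparison I run in my head. The function name, the parameters, and every number in it are my own placeholders, not measured data. It assumes a human reviews every agent run and redoes every failed run by hand at the normal cost.

```python
def agent_cost_per_task(
    run_cost: float,
    success_rate: float,
    review_minutes: float,
    human_hourly_rate: float,
    human_cost_per_task: float,
) -> float:
    """Expected cost of one finished task when the agent tries first.

    Assumptions (mine, for illustration): every run gets a human review,
    and every failed run is redone manually at human_cost_per_task.
    """
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    review_cost = review_minutes * human_hourly_rate / 60
    fallback_cost = (1 - success_rate) * human_cost_per_task
    return run_cost + review_cost + fallback_cost


# Placeholder numbers: $0.50 per agent run, 80% success rate,
# 1 minute of review at $30/hour, and $2.00 for a human to do the task.
cost = agent_cost_per_task(
    run_cost=0.50,
    success_rate=0.8,
    review_minutes=1,
    human_hourly_rate=30,
    human_cost_per_task=2.00,
)
print(f"{cost:.2f}")  # prints 1.40
print(cost < 2.00)    # prints True: the agent wins under these assumptions
```

Drop the success rate to 0.4 with the same inputs and the expected cost climbs to about $2.20, which is more than just paying the human. Oversight and failed runs decide the outcome far more than the sticker price per run.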

3. Visualizing the Evaluation Matrix

Below is a simple matrix that illustrates how I evaluate potential AI-driven computer-use products. You could tweak the ratings or categories to fit your own use cases, but this format helps me decide if I’m ready to integrate a new AI solution into my workflow.

| Criteria | High (3 pts) | Medium (2 pts) | Low (1 pt) |
|---|---|---|---|
| Task Complexity | Routine, predictable tasks (filling forms, straightforward navigation) | Medium complexity (occasionally dynamic pages, minor CAPTCHAs) | Complex, dynamic, or specialized tasks |
| Reliability of Model | Consistent performance (<5% error rates) | Some manual oversight (5-20% error rates) | Frequent errors (>20% error rates) |
| Cost vs. Human Labor | Cheaper than human labor (clear ROI) | Comparable cost (limited ROI) | More expensive (negative ROI) |
| Projected 1-Year Improvement | Likely to improve significantly (active R&D, breakthroughs) | Minor incremental improvements (some R&D) | Stagnant, slow progress (low priority) |

- Goal: If a solution scores mostly 2s and 3s across the four criteria, it’s probably worth prototyping or adopting. If it scores mostly 1s and 2s, it’s more of a “wait and see.”
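If you want to turn the matrix into something you can run against a shortlist of tools, a minimal sketch might look like this. The criterion keys and the cutoff (an average strictly above 2.0 means "go") are my own assumptions; the matrix itself doesn't prescribe an exact threshold, so adjust it to taste.

```python
CRITERIA = (
    "task_complexity",
    "reliability",
    "cost_vs_human",
    "one_year_improvement",
)


def evaluate(scores: dict[str, int]) -> tuple[float, str]:
    """Average the 1-3 ratings from the matrix and map them to a verdict."""
    for name in CRITERIA:
        if scores.get(name) not in (1, 2, 3):
            raise ValueError(f"{name} needs a rating of 1, 2, or 3")
    average = sum(scores[name] for name in CRITERIA) / len(CRITERIA)
    # Assumed cutoff: strictly above 2.0 counts as "mostly 2s and 3s".
    verdict = "prototype or adopt" if average > 2.0 else "wait and see"
    return average, verdict


# Hypothetical ratings for a form-filling agent.
print(evaluate({
    "task_complexity": 3,
    "reliability": 2,
    "cost_vs_human": 2,
    "one_year_improvement": 3,
}))  # prints (2.5, 'prototype or adopt')
```

A plain average hides deal-breakers, so you may prefer to veto anything that scores a 1 on reliability or cost no matter how well it does elsewhere.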

4. The Slideshow Metaphor: A Practical Reality Check

A handy way to visualize the current limitations of AI-driven computer operations is to imagine using your own computer only by looking at slideshows of screenshots:

- You can only see isolated snapshots at a specific time (no real-time mouse tracking or fluid visuals).

- You don’t get immediate feedback on whether your click truly registered as intended.

- You can’t seamlessly adjust your actions if the page updates or if a subtle animation reveals new buttons.

For the AI, it’s a similar situation. Operator “sees” a static screenshot and tries to work out the next best move. When something changes between the screenshot and the action, the agent can’t adapt the way a human would. This is a big reason many early AI-based “computer operators” falter in real-world scenarios with dynamic elements. As a result, we see them as “cool demos” but only tentatively trust them for everyday tasks.
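Here is a toy simulation of that slideshow problem. To be clear, this is not how Operator works internally; `FakePage` and `run_agent` are names I made up, and the "page" is just one button whose position can shift. It only illustrates the observe, act, re-observe loop and what happens when the page changes after the snapshot was taken.

```python
from dataclasses import dataclass


@dataclass
class FakePage:
    """Toy stand-in for a web page: one button whose y-position can shift."""
    button_y: int = 100
    clicked: bool = False

    def screenshot(self) -> dict:
        # A snapshot is a frozen copy of the page state at this instant.
        return {"button_y": self.button_y, "clicked": self.clicked}

    def click(self, y: int) -> None:
        # The click only registers if it lands exactly on the button.
        if y == self.button_y:
            self.clicked = True

    def show_banner(self) -> None:
        # A late-loading banner pushes the button down by 40 pixels.
        self.button_y += 40


def run_agent(page: FakePage, max_steps: int = 3, banner_at_step: int = 0) -> int:
    """Return how many clicks it took to hit the button, or -1 if it gave up."""
    for step in range(max_steps):
        snapshot = page.screenshot()        # observe
        if step == banner_at_step:
            page.show_banner()              # page changes after the snapshot
        page.click(snapshot["button_y"])    # act on possibly stale coordinates
        if page.screenshot()["clicked"]:    # verify with a fresh snapshot
            return step + 1
    return -1


print(run_agent(FakePage()))                     # prints 2: first click missed
print(run_agent(FakePage(), banner_at_step=-1))  # prints 1: page never moved
print(run_agent(FakePage(), max_steps=1))        # prints -1: no retry budget
```

The first click misses because it was aimed at where the button used to be. The agent only recovers because it takes a fresh snapshot and tries again, and every one of those extra round trips costs time and money.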

5. Holding Onto Hope (Especially for Indie Hackers)

Yet not all is doom and gloom. As indie hackers, we thrive on finding opportunities in emerging technologies before they reach mainstream polish. Operator (and similar tools) can still offer unique advantages:

- Automating Routine Tasks: If you’re a solo founder wearing a dozen hats, even partial automation of tasks (like scraping a competitor’s pricing data daily or restocking from a specific vendor’s site) could be a significant timesaver.

- Boosting User Engagement: If you build a platform or product, integrating AI-driven navigation or quick-checkout features might differentiate you in a crowded market—especially when bigger players haven’t fully capitalized on it yet.

- Low-Code/No-Code Synergy: For makers who don’t want to build custom integrations, letting an AI agent “manipulate” the UI could skip the need for complex APIs. You harness the interface you already have.

The pace of iteration is fast. The leap from static “slide-based” interactions to more adaptive, near-real-time approaches could happen sooner than we imagine, particularly if new research from open-source communities or well-funded labs accelerates it.

6. Putting It All Together: My “Build in Public” Takeaways

In my own indie hacker projects, I’m constantly experimenting to see if new AI tools can give me an edge. Right now, my personal conclusion is:

- Short-Term: Most of these agents serve best as “helper demos” or “curiosity boosters”—exciting glimpses into the future but not always robust enough for a fully automated pipeline.

- Medium-Term: Keep a close eye on improvements in real-time, dynamic adaptation. If the next wave of AI models can track changes on a webpage as they happen, we might see reliability and cost-effectiveness jump to a new level.

- Long-Term: Whether it’s Operator or a similar tool, the capacity to truly navigate and operate digital environments like a human will open doors to new product categories. This could transform everything from e-commerce checkouts to user-generated content moderation.

For fellow builders, the message is clear: evaluate carefully, automate selectively, and don’t underestimate how quickly the landscape can shift.

7. Final Thoughts and Inspiration

The emergence of Operator is part of a broader evolution—turning AI from passive text generators into active doers on our behalf. It’s worth watching closely because, even if the technology isn’t perfect yet, it represents a tangible step toward frictionless, accessible automation for everyone, including the smallest indie teams.

- Keep Innovating: Even partial success can save hours in the day-to-day hustle.

- Stay Skeptical: Use a framework or matrix to assess feasibility; don’t jump in just because it’s shiny and new.

- Hold Onto Hope: AI is evolving rapidly; improvements might arrive faster than you think, unlocking big potential for those who are ready.

The path to truly seamless AI-driven computer usage might still feel like operating via a “slideshow,” but each iteration brings us closer to real-time, human-like agility. As we build in public and embrace these iterative leaps, we can shape the future of how we—and our users—work, create, and innovate online.

Thanks for reading! If you’ve got your own experiences (or cautionary tales) with AI-driven agents, let me know. Let’s keep the dialogue going as we collectively experiment, iterate, and build the tools that just might define the next wave of indie hacking success.