Jevons Paradox Meets AI: Designing High-Value Products with Greater Efficiency

How does a technology’s falling cost and rising efficiency affect demand—and ultimately shape the products we build? Jevons Paradox reminds us that boosting efficiency often drives increased consumption rather than substitution or reduction. In the AI world, lower latency and cheaper inference can open the floodgates for new use cases, intensifying total resource usage. Below, we explore what this means for product design, focusing on cost-performance gains, the human experience, and a deeper look at a Product Value formula that can guide our decisions in an era of rapid AI advancement.

1. Jevons Paradox in Tech: Efficiency Begets Consumption

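Jevons Paradox originates in energy economics. It is named after William Stanley Jevons, who observed in 1865 that more efficient steam engines were increasing Britain’s total coal consumption rather than reducing it. The general pattern: increased efficiency in using a resource (e.g., coal, computing power) can paradoxically raise overall demand for that resource. Translated to AI: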

- Better Model Efficiency: Lower inference costs and faster response times stimulate greater usage, including more queries, more ambitious applications, and more real-time AI functionalities.

- Lowering Barriers to Entry: As deploying AI becomes cheaper, more indie hackers and startups can experiment, leading to a proliferation of AI-centric products and a sharp rise in total resource consumption.

Why does this matter? Because cost reductions don’t automatically lead to less consumption; instead, they typically spark new demand. As builders, we need to anticipate scaling demands, potential infrastructure bottlenecks, and the evolving user expectations that arise once AI becomes ubiquitous.
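To make the effect concrete, here is a minimal sketch that assumes demand follows a constant-elasticity curve. The function name `total_spend`, the prices, the query volumes, and the elasticity values are all hypothetical and chosen only for illustration. The point it shows: when demand elasticity is above 1, a 10x drop in cost per query leaves total spend (and, if cost tracks compute, total resource use) higher than before.

```python
def total_spend(cost_per_query, base_cost, base_queries, elasticity):
    """Return (queries, spend) under an assumed constant-elasticity demand curve.

    queries = base_queries * (cost_per_query / base_cost) ** (-elasticity)
    """
    queries = base_queries * (cost_per_query / base_cost) ** (-elasticity)
    return queries, queries * cost_per_query


# Hypothetical baseline: 1,000,000 queries at $0.010 each ($10,000 total).
# Efficiency gain: cost per query falls 10x, to $0.001.
for elasticity in (0.5, 1.0, 1.5):
    queries, spend = total_spend(0.001, 0.010, 1_000_000, elasticity)
    print(f"elasticity={elasticity}: queries={queries:,.0f}, spend=${spend:,.2f}")
```

With elasticity below 1, total spend falls after the price cut; at exactly 1 it stays flat; above 1 it rises. Real demand curves are rarely this tidy, so treat the model as a planning aid rather than a forecast.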

2. AI Performance and the Human Experience

Lower latency and improved performance are vital, but their true impact shows when they enhance the user’s overall experience. A near-zero-latency model is impressive, yet users may still abandon it if the interface, onboarding, or feature design feels clunky. This is where a clear product framework helps connect performance gains to actual adoption.

Consider these factors influencing user perception:

- Speed vs. Reliability: Users appreciate quick responses, but repeated failures or inconsistent answers can overshadow mere speed improvements.

- Flow and Context: Even if your model is blazing fast, ensure users can integrate AI outputs seamlessly into their workflow (e.g., export documents, automate tasks, or make decisions).

- Confidence and Transparency: AI decisions that explain reasoning or give relevant context can improve user trust. Speed alone, without clarity, can feel superficial.

The upshot: real performance improvements are measured not just by latency and throughput, but by how well they reduce friction, save time, and fit the user’s mental model of how the product should behave.
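One way to put numbers on the speed-versus-reliability trade-off is to estimate the expected time until the user gets a usable answer. The sketch below assumes each attempt succeeds independently with the same probability and that a failed attempt is simply retried, so the expected number of attempts is 1 / success_rate. The function name and the sample figures are hypothetical.

```python
def expected_time_to_success(latency_s, success_rate):
    """Expected seconds until a usable answer, assuming independent retries.

    With success probability p per attempt, the expected number of attempts
    is 1 / p, so the expected total time is latency / p.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return latency_s / success_rate


# Hypothetical comparison: a fast but flaky model vs. a slower, steadier one.
fast_flaky = expected_time_to_success(latency_s=0.5, success_rate=0.5)
slow_steady = expected_time_to_success(latency_s=0.8, success_rate=0.95)
print(f"fast but flaky:  {fast_flaky:.2f} s")
print(f"slower, steady:  {slow_steady:.2f} s")
```

Under these assumed numbers the slower model delivers a usable answer sooner on average, and the estimate ignores the extra frustration a user feels on each failed attempt.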

3. Designing for Cost-Performance Gains: MoE and Knowledge Distillation

Two practical techniques are driving cost and performance improvements in AI, potentially triggering Jevons Paradox:

- Mixture of Experts (MoE)

  - Splits parts of a large model into specialized sub-networks (“experts”).

  - Uses a learned gating network to route each input (typically each token) to a small subset of experts, so only a fraction of the model’s parameters run per request.

  - Potentially improves inference speed (and reduces cost) while maintaining or enhancing quality.

- Knowledge Distillation (KD)

  - Trains a smaller “student” model to mimic a larger “teacher” model.

  - Can preserve much of the teacher’s accuracy at lower compute cost.

  - Makes it feasible to serve large user bases on modest hardware.
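The MoE routing idea described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not a production layer: the experts are plain Python functions standing in for sub-networks, the gate scores are hard-coded rather than produced by a learned gating network, and the names `route_top_k` and `moe_forward` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Toy experts standing in for feed-forward sub-networks (assumption).
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # would come from a learned gate in practice

print(route_top_k(gate_scores, k=2))
print(moe_forward(3.0, experts, gate_scores, k=2))
```

Only two of the four experts execute for this input, which is where the compute savings come from. All experts still need to be held in memory, so the savings apply to per-request compute rather than to model size.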

As cost and performance thresholds improve via MoE and KD, user demand can surge—embodying Jevons Paradox. However, the opportunity is vast: more affordable AI sparks innovation, from advanced content generation to real-time decision support, elevating the product’s perceived value—if you handle it right.
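For the distillation half of the picture, a commonly used training signal is the divergence between the teacher’s and the student’s temperature-softened output distributions. The sketch below is a minimal pure-Python version under stated assumptions: the logits are made-up numbers, the function names are hypothetical, and in practice this soft-target term is usually combined with an ordinary loss on the true labels.

```python
import math


def softmax_t(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax_t(teacher_logits, temperature)  # teacher soft targets
    q = softmax_t(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Made-up logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 4.0, 1.0]

print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
```

A student whose outputs track the teacher’s gets a loss near zero, while one that ranks the classes differently is penalized more heavily. The T^2 factor is a common convention for keeping gradient magnitudes comparable across temperatures.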

4. Digging Deeper into the Product Value Formula

When we consider improved performance in tandem with user adoption, we often look to a simple but powerful equation:

Product Value = (New Experience from New Product) − (Legacy Experience from Current Product) − (Switching Cost to New Product)

4.1 New Experience Delivered by the New Product

- Enhanced Performance: Faster answers, near-zero latency, or real-time predictive capabilities.

- Novel Features: Capabilities that were previously inconceivable or impractical.

- Ease of Use: Intuitive onboarding, minimal friction.

4.2 Legacy Experience Delivered by the Current Product

- User Comfort Zone: Existing solutions that are “good enough.”

- Proven Reliability: Users trust their present workflow or toolset, especially if they’ve used it for a long time.

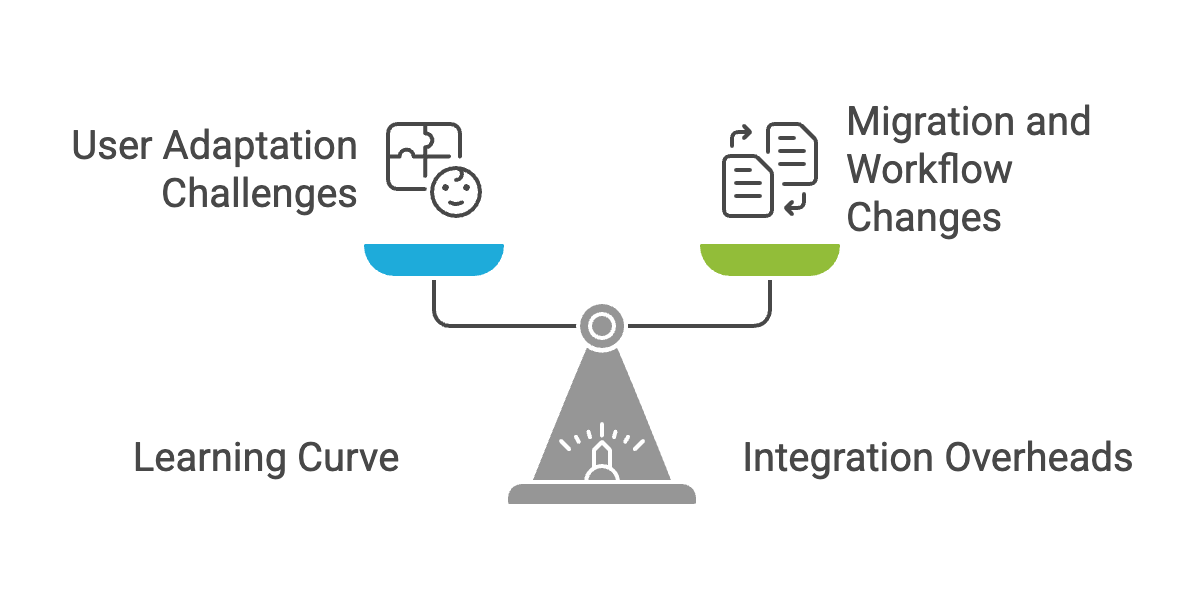

4.3 Switching Cost to the New Product

- Learning Curve: Users must adapt to new interfaces, commands, or usage patterns.

- Integration Overheads: Migrating data, changing workflows, or retraining staff.

- Risk Appetite: Users or teams might fear downtime or uncertainties when switching.

Why Does Better AI Performance Matter Here?

- Higher “New Experience” Score: As your AI becomes more efficient, it unlocks more compelling features (e.g., live summarization, dynamic personalization), raising the “New Experience” value.

- Decreased “Switching Cost”: If your product is fast and easy enough that users see benefits right away, the switching cost can diminish (or at least feel minimal).

- Contextual Gains: With improved performance, the reliability gap between your AI and the user’s status quo narrows—helping you surpass the “Legacy Experience” threshold.

In essence, the product’s overall perceived value can skyrocket when model improvements directly translate into user-centric benefits (speed, reliability, new features) while minimizing the downsides of switching.
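As a worked example of the Product Value formula, the sketch below scores each term on a hypothetical 0 to 10 scale and compares a product before and after a performance improvement. The class name, the scale, and every score are assumptions for illustration; the formula itself is the one given earlier in this section.

```python
from dataclasses import dataclass


@dataclass
class ProductValue:
    """Scores on a hypothetical 0-10 scale for each term of the formula."""
    new_experience: float
    legacy_experience: float
    switching_cost: float

    def value(self) -> float:
        # Product Value = New Experience - Legacy Experience - Switching Cost
        return self.new_experience - self.legacy_experience - self.switching_cost


# Before: the new product is only slightly better and costly to adopt.
before = ProductValue(new_experience=7.0, legacy_experience=6.0, switching_cost=2.0)

# After: faster, cheaper inference enables new features and easier onboarding.
after = ProductValue(new_experience=9.0, legacy_experience=6.0, switching_cost=1.0)

print("before:", before.value())
print("after: ", after.value())
```

With these assumed scores the value moves from negative (users stay put) to positive (switching pays off), even though the legacy experience has not changed at all.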

5. A Glimpse Ahead: CoT Models, Agentic Workflows, and Next-Level AI

As we continue refining model efficiency—whether through Mixture of Experts or Knowledge Distillation—we edge closer to a sweet spot where cost and performance satisfy most users’ real-world needs. But raw performance is only one piece of the puzzle. The next big shift on the horizon involves:

- Chain of Thought (CoT) Models: Large language models (or smaller distilled versions) that can articulate reasoning steps in detail. This can improve transparency, debuggability, and trust.

- Agentic Workflows: Systems that break complex tasks into smaller steps, orchestrating them autonomously. By chaining multiple AI “agents” together, you can handle sophisticated processes—from project planning to multi-step transactions—without micromanaging each stage.
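To give a rough feel for the agentic pattern, here is a toy sketch in which three stub “agents” (plan, execute, review) are chained over a shared state. Everything here is hypothetical: the step functions return canned strings instead of calling a model, and `run_workflow` is an illustrative name rather than any framework’s API.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]


def plan(state: State) -> State:
    """Stub planner: split the goal into sub-tasks (a real agent would call a model)."""
    goal = state["goal"]
    return {**state, "tasks": [f"research {goal}", f"draft {goal}"]}


def execute(state: State) -> State:
    """Stub executor: pretend to complete each sub-task."""
    return {**state, "results": [f"done: {task}" for task in state["tasks"]]}


def review(state: State) -> State:
    """Stub reviewer: approve only if every task produced a result."""
    return {**state, "approved": len(state["results"]) == len(state["tasks"])}


def run_workflow(goal: str, steps: List[Step]) -> State:
    """Run the steps in order, recording which step ran at each stage."""
    state: State = {"goal": goal, "trace": []}
    for step in steps:
        new_state = step(state)
        new_state["trace"] = state["trace"] + [step.__name__]
        state = new_state
    return state


final = run_workflow("launch plan", [plan, execute, review])
print(final["trace"])
print(final["approved"])
```

The recorded trace is the part worth noting: once steps run autonomously, a record of what ran and in what order becomes the main tool for debugging and for user trust.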

Why mention these now? Because once MoE and KD optimize AI to meet basic performance demands, we can confidently layer on more advanced frameworks—like CoT reasoning and agentic orchestration—to supercharge the product experience. We’ll dive deeper into how these models and workflows work, and why they’re game-changers for AI-driven products, in the next article.

Final Thoughts

Jevons Paradox is a powerful reminder that making AI faster and cheaper doesn’t just mean efficiency gains; it can trigger an explosion in consumption and user demands. Balancing that dynamic effectively hinges on:

- Understanding the Product Value Equation: Continually weigh new experience vs. legacy comfort and switching costs.

- Leveraging MoE and KD: Use state-of-the-art optimizations to keep performance high without bankrupting your infrastructure.

- Preparing for Advanced Workflows: Once basic performance thresholds are met, there’s vast potential in chain-of-thought reasoning and agentic orchestrations to elevate user satisfaction.

Stay tuned for our next deep dive: how CoT models and agentic workflows can transform AI products once we’ve reached cost-performance parity with user expectations. The future lies in holistic user experiences—beyond raw performance metrics, into the realm of seamless, intelligent, and trustworthy AI interactions.

Got questions or ideas?

- Feel free to share your thoughts on how you’re balancing cost, performance, and user experience in AI-driven products.

- Stay connected for the upcoming blog post on CoT models and agentic workflows—the next frontier of AI product innovation!