Generation of Generation: The Reverse Engineering of LLM Mastery

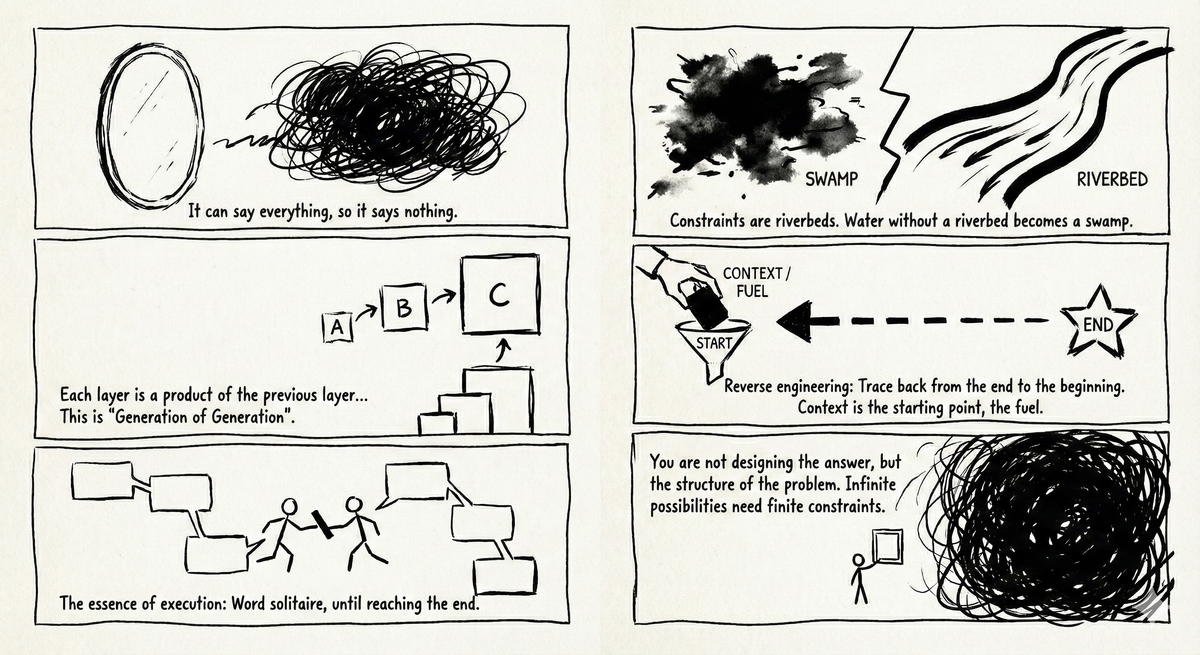

Large language models have an essential characteristic: they can say everything, so they say nothing.

This is not a flaw; it is by design. To capture patterns across all of human language, the model must become a mirror that can reflect any possible input. Generality and generalization are its conditions for existence. But this also means that when you ask it to “write me an article,” it faces infinitely many possible paths. It can go anywhere, so it goes to the most mediocre place: the average of all directions.

Constraints Are Goals, Goals Are Freedom

Here emerges a counterintuitive phenomenon: the more constraints you give the model, the freer it becomes.

The rise of agentic architecture is no accident. When we hand the system goals, steps, and checkpoints, the model shifts from “can say anything” to “say things in this direction.” Constraints are not chains; they are riverbanks. Water without banks becomes a swamp; with banks, it flows to the sea.

But this raises a deeper question: where do the riverbanks come from?

The Method: Generation of Generation

The answer hides in a recursive structure.

You want the model to generate an article. But that article does not leap from the void. It is the final crystallization of a chain of intermediates: first an outline, then the outline generates paragraphs, paragraphs generate sentences, and sentences generate words. Each layer is the product of the layer above and the generator of the layer below.

This is “generation of generation.” You are not designing the final product—you are designing the generation path.
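A minimal sketch of this layering, under loud assumptions: `stub_model` is a hypothetical placeholder for whatever model call you use, and the prompt wording and the two-layer split (outline, then paragraphs) are illustrative only. The point is that `write_article` never writes the article itself; it only wires one layer's output into the next layer's input.

```python
from typing import Callable, List

# Hypothetical stand-in for a model call: takes a prompt, returns text.
Generator = Callable[[str], str]


def stub_model(prompt: str) -> str:
    """Deterministic placeholder so the sketch runs without any LLM."""
    return f"<output for: {prompt}>"


def write_article(topic: str, generate: Generator, sections: int = 3) -> str:
    """Design the generation path, not the final text."""
    # Layer 1: the topic generates an outline (one heading per section).
    outline: List[str] = [
        generate(f"Heading {i + 1} for an article on {topic}")
        for i in range(sections)
    ]
    # Layer 2: each heading generates its own paragraph.
    paragraphs = [generate(f"Paragraph expanding on: {h}") for h in outline]
    return "\n\n".join(paragraphs)


article = write_article("rivers", stub_model, sections=2)
print(article)
```

Swapping `stub_model` for a real model call leaves the path untouched, which is the sense in which the path, not the product, is what gets designed.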

Once you understand this, your thinking must invert. Stop asking “what result do I want?” and start asking “what process produces this result?” Stop designing the product and start designing the source of the product.

Reverse Engineering: Tracing Back from Endpoint to Origin

Since the final output is the result of a chain of generations, the real work is decomposing this chain.

Imagine you want a research report. Step back once: the report comes from analysis. Analysis comes from data organization. Data organization comes from search results. Search results come from problem definition. Problem definition comes from—your initial prompt.

Every node in this chain is one generation. Your task is not to write the report, but to design this chain. Design it well, and the report grows itself.
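The research-report chain can be sketched as data plus a small loop. This is an illustration, not a prescribed implementation: `stub_model` is a hypothetical placeholder for a model call, and the stage names are taken directly from the example in the text. The chain is written down backward, as it was traced, and then executed forward from the origin.

```python
from typing import Callable, List, Tuple

# Stages as traced in the text, listed backward from endpoint to origin.
BACKWARD_CHAIN = [
    "report",
    "analysis",
    "data organization",
    "search results",
    "problem definition",
]


def stub_model(prompt: str) -> str:
    """Hypothetical placeholder for a model call; it only wraps the prompt."""
    return f"[{prompt}]"


def run_chain(
    initial_prompt: str, generate: Callable[[str], str]
) -> Tuple[List[str], str]:
    """Run the chain forward: origin first, endpoint last."""
    artifact = initial_prompt
    order: List[str] = []
    for stage in reversed(BACKWARD_CHAIN):
        # Each node is one generation that consumes the previous artifact.
        artifact = generate(f"Produce the {stage} from: {artifact}")
        order.append(stage)
    return order, artifact


order, report = run_chain("How do riverbanks shape flow?", stub_model)
print(" -> ".join(order))
```

Designing the chain here means editing `BACKWARD_CHAIN` and the per-stage prompt, not touching the report.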

So what is the origin of the chain?

Context Is the Origin

The origin is context. The initial framing you provide determines the DNA of the entire generation chain.

But context is not given arbitrarily. You must know what frameworks this domain requires, what terminology, what paradigms of thought. Domain knowledge is the fuel of context. Without fuel, the model can only fill gaps with general common sense, and the result is mediocrity.

This is why experts using LLMs far outperform novices. Not because experts know more complex prompting techniques, but because experts know what to inject at the origin. They know which constraints are meaningful, which directions are worth exploring.
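One way to picture the difference between the novice's and the expert's origin is as a small structure that frames the same task with or without domain knowledge. Everything here is an assumption made for illustration: the `DomainContext` name, its three fields, and the example values are hypothetical, not a standard prompt format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DomainContext:
    """What an expert injects at the origin (field names are illustrative)."""

    frameworks: List[str] = field(default_factory=list)
    terminology: List[str] = field(default_factory=list)
    constraints: List[str] = field(default_factory=list)

    def frame(self, task: str) -> str:
        """Prepend whatever domain knowledge is available to the task."""
        parts = []
        if self.frameworks:
            parts.append("Frameworks: " + "; ".join(self.frameworks))
        if self.terminology:
            parts.append("Terminology: " + "; ".join(self.terminology))
        if self.constraints:
            parts.append("Constraints: " + "; ".join(self.constraints))
        parts.append("Task: " + task)
        return "\n".join(parts)


task = "Write a report on subscriber loss."
novice = DomainContext()  # nothing to inject: the model falls back on common sense
expert = DomainContext(
    frameworks=["cohort analysis"],
    terminology=["churn", "retention curve"],
    constraints=["cite the data source for every figure"],
)
print(novice.frame(task))
print(expert.frame(task))
```

The task line is identical in both cases; only the origin differs.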

Word Relay: The Essence of Execution

When the origin is set and the path decomposed, what remains is execution.

The essence of execution is simple: word relay, until the destination is reached.

Each generation by the model continues the previous output, baton after baton, until the goal is achieved. This is where agentic architecture reveals its true value: it does not make the model smarter, but it gives the relay race order. Each leg knows where it receives the baton and where it must run to.
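The relay can be sketched as a loop in which each leg receives the previous output and hands on its own, stopping when the goal is reached. This is a simplified sketch: the `step` and `done` callables are hypothetical stand-ins for a model call and a goal check, and the `max_legs` cap is an added assumption so the race cannot run forever.

```python
from typing import Callable, List


def relay(
    start: str,
    step: Callable[[str], str],
    done: Callable[[str], bool],
    max_legs: int = 10,
) -> List[str]:
    """Pass the baton until the goal check succeeds or the legs run out."""
    batons = [start]
    for _ in range(max_legs):
        if done(batons[-1]):
            break
        # Each leg continues from exactly what the previous leg produced.
        batons.append(step(batons[-1]))
    return batons


def add_word(text: str) -> str:
    """Toy leg: append one word (a real leg would call a model)."""
    return text + " word"


def five_words(text: str) -> bool:
    """Toy destination: the text has at least five words."""
    return len(text.split()) >= 5


batons = relay("once", add_word, five_words)
print(batons[-1])
```

The loop adds no intelligence of its own; it only fixes where each leg starts and when the race ends.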

Conclusion: You Design Not Answers, But the Structure of Questions

Those who master LLMs do not ask better questions—they design better question structures.

They walk backward from the endpoint, decompose every link in the generation chain, then inject the right context at the origin. After that, they simply press start and let the word relay unfold.

General models need specific paths. Infinite possibilities need finite constraints.

This is the working method of the AI era: no longer directly manufacturing products, but manufacturing the process that manufactures products.