Faster, Smarter, and Under 500ms: Achieving Real-Time AI with Reflection

Introduction

In the first article, we unpacked how chain-of-thought (CoT) reasoning and agentic workflows can dramatically raise the bar for smaller models. Now, let’s talk about something just as critical: speed. If your AI responses arrive too slowly, the user experience suffers. And if you’re aiming for a product that feels as smooth as a human conversation, the technical challenge intensifies.

This article discusses human latency expectations, how to keep reflective AI under that crucial threshold, and why specialized inference chips, such as those from Groq, matter so much for high-speed inference.

1. Human Reaction & Latency Expectations

A widely cited figure for “comfortable” human conversational latency is under 500 milliseconds. Beyond roughly 1.5 seconds, the conversation feels choppy or unresponsive; under 500ms, it starts feeling nearly instantaneous.

- Every extra step counts: Each reflection or external tool query adds overhead. Even a small model can stall if it’s repeatedly calling an API or generating extended CoT.

- Speech-to-Text and Text-to-Speech: If you integrate voice I/O, factor in those transformations too.

If your system can consistently respond in under half a second from audio in to audio out, users perceive it as truly “real-time.”
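To make the budget concrete, here is a minimal sketch of how the fixed stages of a voice pipeline eat into the 500ms target. The stage names and millisecond values are hypothetical placeholders, not measurements; substitute numbers from your own system.

```python
# Hypothetical per-stage latencies in milliseconds; replace with measured values.
STAGE_MS = {
    "speech_to_text": 120,
    "network_round_trip": 40,
    "text_to_speech": 100,
}

TARGET_MS = 500  # the conversational threshold discussed above


def llm_budget_ms(stage_ms: dict[str, float], target_ms: float = TARGET_MS) -> float:
    """Return the time left for model inference after the fixed pipeline stages."""
    return target_ms - sum(stage_ms.values())


remaining = llm_budget_ms(STAGE_MS)
print(f"Time left for reasoning and generation: {remaining} ms")
```

With these assumed values, the fixed stages take 260ms, leaving 240ms for all reasoning and generation. A negative result would mean the target is unreachable before the model produces a single token.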

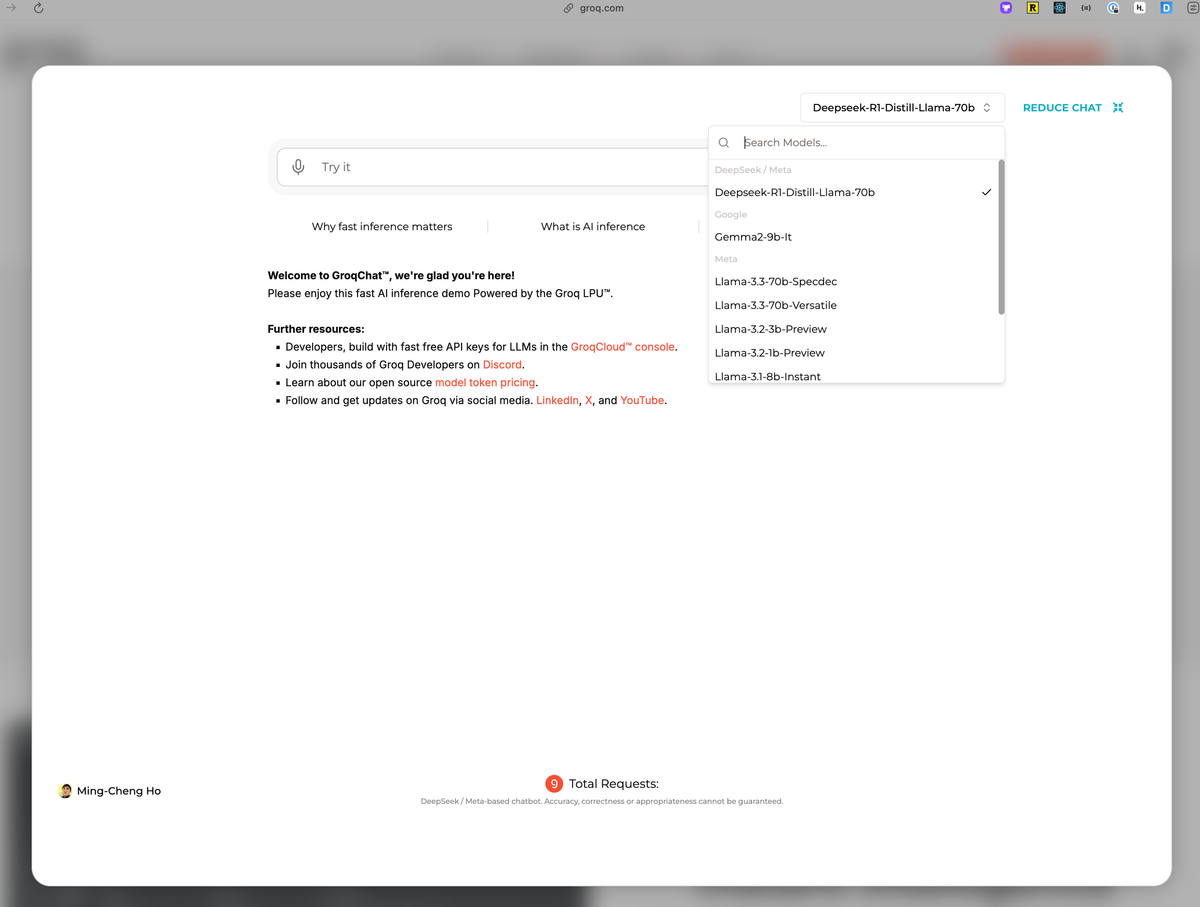

2. DeepSeek R1 Distill Llama 70B + Groq: A Low-Latency Collaboration

DeepSeek R1 Distill Llama 70B is a 70B-parameter model distilled from the much larger DeepSeek R1. It retains much of the original model’s reasoning performance at a fraction of the size. In parallel, hardware accelerators like Groq’s can reach inference speeds of up to 275 tokens per second (or more, depending on configuration).

- MoE + Distillation: The original DeepSeek R1 uses a mixture-of-experts (MoE) design that activates only a subset of its parameters for each token. The distilled Llama 70B variant is a dense model trained on R1’s reasoning outputs, so it inherits much of that capability with a far smaller memory footprint and faster inference.

- Groq Hardware: By focusing on throughput, Groq chips handle large-scale matrix operations with minimal overhead. Pairing a smaller model with fast hardware can make multi-step reflection flows feasible, especially when you keep the iteration count modest (say, two or three reflection steps).
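As a rough back-of-the-envelope check, the throughput figure translates directly into how many tokens a reflection flow can afford. The sketch below assumes a steady decode rate and splits the budget evenly across sequential steps; the function names and the optional `first_token_ms` parameter are illustrative, and real systems also pay for prompt processing and network time.

```python
import math


def max_tokens(budget_ms: float, tokens_per_second: float,
               first_token_ms: float = 0.0) -> int:
    """Tokens that fit in a time budget at a steady decode rate."""
    usable_ms = max(budget_ms - first_token_ms, 0.0)
    return math.floor(usable_ms / 1000.0 * tokens_per_second)


def tokens_per_step(budget_ms: float, tokens_per_second: float, steps: int,
                    first_token_ms: float = 0.0) -> int:
    """Split the budget evenly; each sequential step pays its own first-token delay."""
    return max_tokens(budget_ms / steps, tokens_per_second, first_token_ms)


# 275 tokens per second is the figure quoted in this article.
print(max_tokens(500, 275))          # whole budget spent on a single pass
print(tokens_per_step(500, 275, 3))  # same budget split over three reflection steps
```

At 275 tokens per second, a full 500ms allows about 137 generated tokens, or roughly 45 per step across three steps. This is why short reflection outputs and a low step count matter so much at this latency target.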

3. Designing for Iterative Reflection Without Slowing Down

To benefit from reflection while keeping responses fast, you need to deliberately limit how many reflection loops your model runs:

- Limit reflection steps: In many tasks, two or three cycles of reasoning and observation provide a big jump in correctness without too much slow-down.

- Use a threshold: If the model’s confidence passes a certain threshold (or if the reflection step detects no contradictions), skip further loops.

- Optimize each step: If you’re using external APIs (like web search), cache results to avoid duplicating lookups in repeated agentic loops.
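The three tactics above can be combined in one small control loop. This is a sketch under stated assumptions rather than a definitive implementation: `Draft`, `cached_search`, `reflect`, and `fake_generate` are hypothetical names, the confidence score is whatever estimate your system can produce, and the search function is a stand-in for a real API call.

```python
from dataclasses import dataclass
from functools import lru_cache
from typing import Callable


@dataclass
class Draft:
    answer: str
    confidence: float  # 0.0 to 1.0, however your system estimates it


@lru_cache(maxsize=256)
def cached_search(query: str) -> str:
    """Stand-in for an external lookup; lru_cache avoids repeating identical calls."""
    return f"results for {query!r}"  # hypothetical: replace with a real API call


def reflect(question: str,
            generate: Callable[[str, str, int], Draft],
            max_steps: int = 3,
            confidence_threshold: float = 0.8) -> tuple[Draft, int]:
    """Run at most max_steps passes, stopping early once confidence is high enough."""
    draft = generate(question, cached_search(question), 0)
    steps = 1
    while steps < max_steps and draft.confidence < confidence_threshold:
        # A repeated query is served from the cache instead of hitting the API again.
        draft = generate(question, cached_search(question), steps)
        steps += 1
    return draft, steps


def fake_generate(question: str, context: str, step: int) -> Draft:
    """Toy model for demonstration: confidence rises with each reflection pass."""
    return Draft(answer=f"draft {step} for {question}", confidence=0.5 + 0.2 * step)


draft, steps_used = reflect("What is the capital of France?", fake_generate)
print(steps_used, draft.answer)
```

The hard cap bounds worst-case latency, while the threshold lets easy questions return after a single pass. Tuning `max_steps` and `confidence_threshold` against measured latency is where the speed-versus-quality trade-off gets decided.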

Even if you can’t always stay under 500ms, aiming for a user-perceived sub-second response is still a big improvement over multi-second lags.

4. Beyond Tech: Crafting a Great User Experience

- Set user expectations: If your system occasionally takes 800ms to provide a more accurate answer, highlight the trade-off (faster answers vs. higher accuracy).

- Design for partial progress: Consider showing a quick partial response or a “thinking…” indicator if reflection steps might take longer.

- Measure carefully: Collect and analyze logs for each reflection step. Track where the system slows down, and optimize the most time-consuming loops.
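For the measurement step, a lightweight approach is to wrap each stage in a timer and rank stages by total time. The sketch below uses only the Python standard library; the `timed` helper, the `timings_ms` store, and the step names are illustrative, and the `time.sleep` calls stand in for real model or tool calls.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Maps a step name to the list of durations (in ms) recorded for it.
timings_ms: dict[str, list[float]] = defaultdict(list)


@contextmanager
def timed(step: str):
    """Record the wall-clock duration of the wrapped block under the given step name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings_ms[step].append((time.perf_counter() - start) * 1000.0)


def slowest_steps(timings: dict[str, list[float]], top_n: int = 3) -> list[tuple[str, float]]:
    """Return the top_n steps ranked by total recorded time, slowest first."""
    totals = {step: sum(samples) for step, samples in timings.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:top_n]


with timed("draft"):
    time.sleep(0.02)  # placeholder for the first generation pass
with timed("reflection_1"):
    time.sleep(0.01)  # placeholder for one reflection pass

for step, total in slowest_steps(timings_ms):
    print(f"{step}: {total:.1f} ms")
```

In production you would likely ship these samples to your logging or metrics system rather than keep them in memory, but the ranking idea is the same: optimize the step at the top of the list first.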

5. Leading Your Startup with Speed & Transparency

From a leadership or “build in public” standpoint:

- Show your iteration: Post benchmarks and improvements over time. Early adopters love seeing the behind-the-scenes data.

- Engage your community: Let them influence how many reflection steps you use by giving real feedback on response times.

- Keep refining: As specialized AI chips evolve and new distillation or MoE methods appear, your iteration strategy (and your public stories about it) can keep pace.

Conclusion

With the right hardware, well-structured reflection loops, and a tight focus on user latency expectations, you can build an AI product that’s both smart and fast, even with a smaller model. The DeepSeek R1 family, with its MoE flagship and distilled smaller variants, provides a useful blueprint, especially when paired with high-throughput acceleration from platforms like Groq.

Ultimately, the goal is to meet (or beat) the sub-500ms latency that people expect in conversation whenever possible. That level of responsiveness not only feels magical, but also meets a fundamental user expectation for real-time interaction. By balancing multi-step reasoning against performance optimizations, you can deliver a product experience that stands out.

Final Thoughts

This two-article series underscores an emerging AI trend: you don’t always need the absolute largest model to deliver powerful, near-real-time intelligence. Through reflection (CoT) and agentic workflows, smaller and more cost-effective LLMs can reach surprisingly high levels of accuracy. The key is designing your workflow to keep latency under control, which may mean leveraging specialized hardware and capping reflection steps at the sweet spot between speed and quality.

For indie hackers, founders, or creators “building in public,” it’s an exciting time to experiment with these approaches. Doing so can differentiate your product, and sharing your journey fosters community trust and can drive powerful open-source collaborations.